Inside the Red Team Toolbox: Linux Info-Gathering

In red teaming, rapid and efficient information gathering is essential.

To streamline this process, we’ve developed Vermilion, a lightweight post-exploitation tool for the rapid collection and optional exfiltration of sensitive data from Linux systems.

A significant share of the world's computational workloads runs on GNU/Linux.

These machines are used primarily as servers and increasingly as workstations. Because they are integrated with critical infrastructure, they often become treasure troves of sensitive information such as configuration files, passwords and tokens.

Full-featured C2 frameworks such as Sliver, Cobalt Strike, Havoc, and Metasploit already exist, but Vermilion is not meant to compete with them.

The rationale behind our tool is far more modest. During engagements, a Linux machine may be compromised with only a brief, limited window of access.

In these cases, a fast, automated way to collect as many sensitive files as possible becomes invaluable, along with the option to exfiltrate them if a remote endpoint is already set up.

Remote network access is often not an option, particularly in scenarios involving physical access to air-gapped machines. In these situations, carrying a lightweight binary capable of creating an archive with all sensitive files and configurations from a Linux machine can be a lifesaver.

Understanding Vermilion

Vermilion is engineered to automate the retrieval of critical system information and sensitive directories, including:

- shell history
- environment variables
- .ssh
- .aws
- .docker
- .kube
- .azure
- /etc/passwd
- /etc/group
- and many others

Once collection is complete, Vermilion compiles these files into a compressed archive. When data exfiltration is required, it can send the archive to a specified endpoint via an HTTP POST request. Note that setting up the exfiltration web service is beyond Vermilion's scope; users must implement their own servers to handle the incoming data.

Our red team has developed Infrastructure-as-Code automation to deploy public exfiltration endpoints. These run either on cloud providers or on local infrastructure exposed through tunneling tools such as Cloudflare Tunnel or ngrok.

A Practical Application: Identifying Misconfigurations in GitHub Actions

Even in its early stages of development and testing, Vermilion proved extremely useful. Among other things, it helped us identify an unusual, sub-optimal configuration in GitHub Actions' cloud-hosted runners.

Specifically, after testing the tool on Linux servers, we ran it inside a GitHub Actions workflow. The goal was to find out whether any sensitive files or configurations could be exfiltrated from the runner's environment.

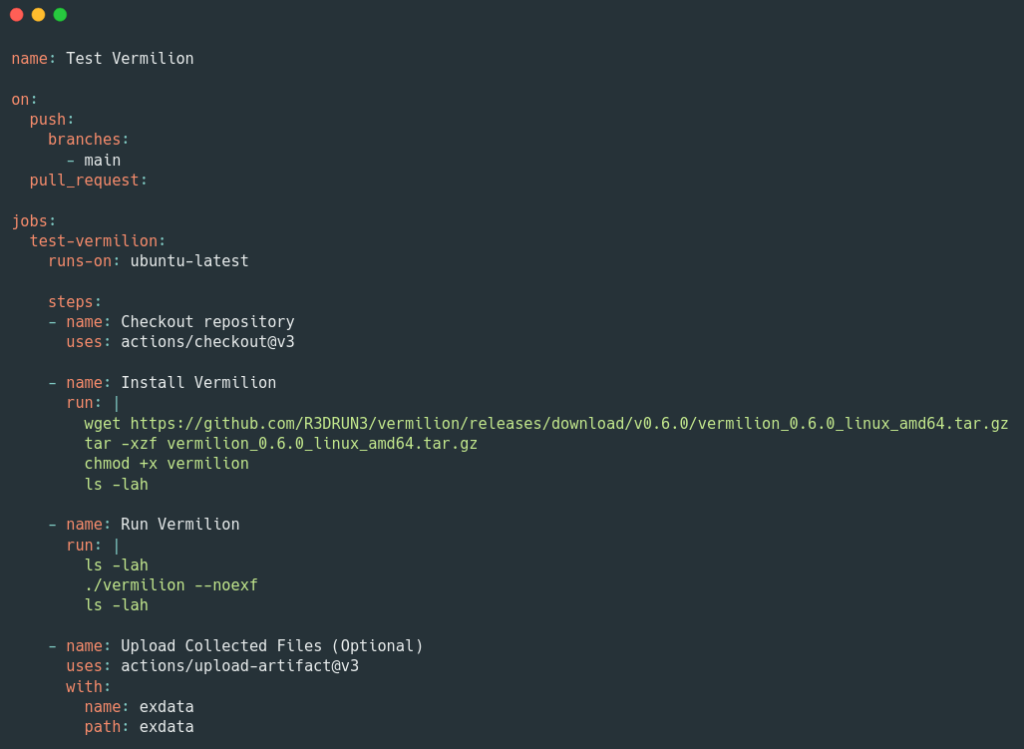

Here's the workflow we used:

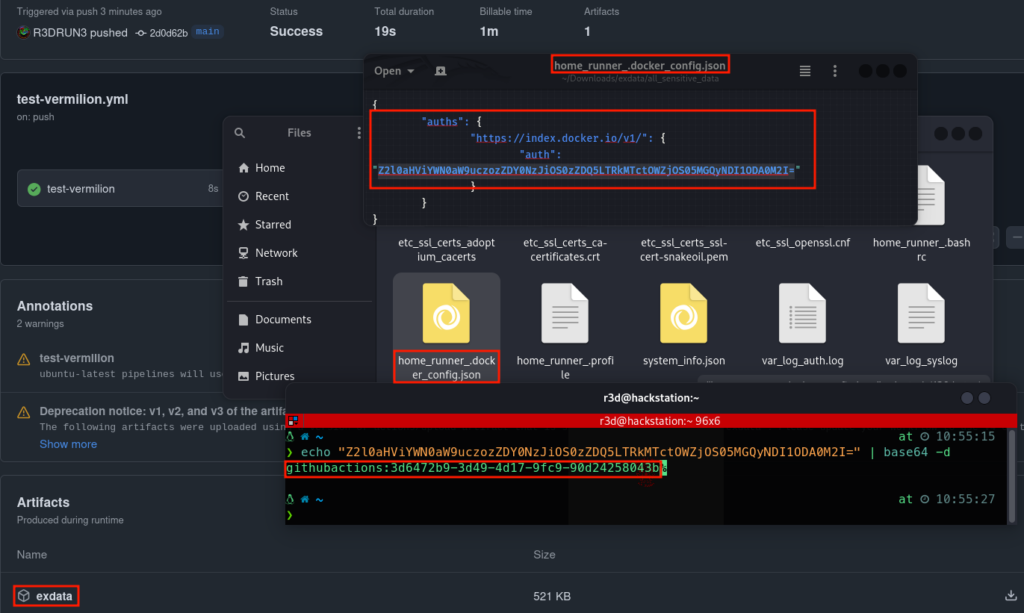

What we found next was something we were unaware of and, frankly, did not expect. In the extracted archive, the docker_config.json file contained Docker Hub credentials belonging to the user https://hub.docker.com/u/githubactions:

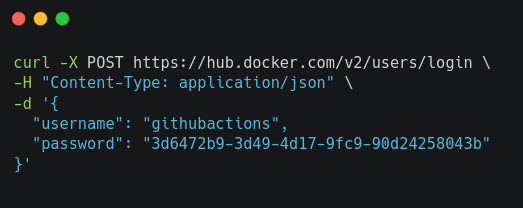
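Defenders may want to check whether a runner or host of their own ships stored registry credentials like these. The minimal Python sketch below does that.

It assumes the standard Docker CLI config.json layout:

- an "auths" map keyed by registry, whose inline "auth" value is simply base64 of "user:password" (encoding, not encryption);
- optional "credsStore" and "credHelpers" entries that delegate secrets to external helpers;
- the file living in $DOCKER_CONFIG, or in ~/.docker by default.

The sketch reports only which registries carry stored secrets and never decodes or prints them. The function names are hypothetical.

```python
import json
import os
from pathlib import Path
from typing import List, Optional


def default_config_path() -> Path:
    # The Docker CLI reads config.json from $DOCKER_CONFIG, or ~/.docker by default.
    config_dir = os.environ.get("DOCKER_CONFIG") or str(Path.home() / ".docker")
    return Path(config_dir) / "config.json"


def registries_with_stored_auth(config: dict) -> List[str]:
    """Registries whose 'auths' entry carries an inline secret.

    The secret is never decoded or printed: an inline 'auth' value is just
    base64("user:password"), so its presence alone is the finding.
    """
    found = []
    for registry, entry in config.get("auths", {}).items():
        if isinstance(entry, dict) and (entry.get("auth") or entry.get("identitytoken")):
            found.append(registry)
    return sorted(found)


def registries_with_helpers(config: dict) -> List[str]:
    """Registries delegated to an external credential helper instead."""
    return sorted(config.get("credHelpers", {}))


def audit(path: Optional[Path] = None) -> List[str]:
    """Print a short report for one config file and return the flagged registries."""
    path = path or default_config_path()
    if not path.is_file():
        print(f"no Docker config at {path}")
        return []
    config = json.loads(path.read_text())
    flagged = registries_with_stored_auth(config)
    for registry in flagged:
        print(f"inline credentials stored for: {registry}")
    for registry in registries_with_helpers(config):
        print(f"credential helper configured for: {registry}")
    if config.get("credsStore"):
        print(f"default credential store: {config['credsStore']}")
    return flagged
```

You could run `audit()` as a step in one of your own workflows to see whether the runner image ships inline registry credentials. Whether to fail the step when the returned list is non-empty is a policy choice.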

These credentials are hardcoded and shared by all GitHub-hosted runners! With them, anyone can retrieve a JWT authentication token from Docker Hub using the following command:

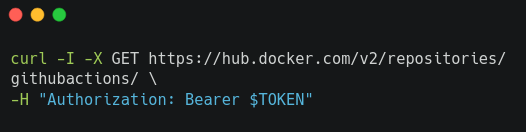

Once we have the token, we can check the rate limit with the following command:

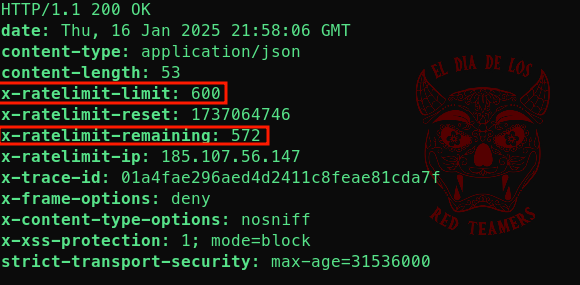

Example output:

When we pull Docker images using these credentials, the value of the x-ratelimit-remaining header decreases with each request. We also tested this with a script that pulls many images in parallel, which made the remaining quota drop quickly.
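You can run the same kind of check against your own Docker Hub account, or anonymously against your own IP. Below is a minimal Python sketch of Docker's documented rate-limit check, which is not necessarily identical to the command used above. It:

1. requests a pull token for the ratelimitpreview/test repository from auth.docker.io;
2. sends a HEAD request for that repository's manifest;
3. parses the rate-limit headers.

The header names and the "count;w=window-in-seconds" value format follow Docker's documentation. Both are assumptions that may change, which is why the sketch also accepts the x- prefixed name reported in this article.

```python
import base64
import json
import urllib.request
from typing import Dict, Optional, Tuple

# Endpoints from Docker's documented rate-limit check, which probes the
# special ratelimitpreview/test repository.
AUTH_URL = ("https://auth.docker.io/token?service=registry.docker.io"
            "&scope=repository:ratelimitpreview/test:pull")
PROBE_URL = "https://registry-1.docker.io/v2/ratelimitpreview/test/manifests/latest"


def get_token(username: Optional[str] = None, password: Optional[str] = None) -> str:
    """Fetch a pull token; anonymous unless the caller supplies their own credentials."""
    request = urllib.request.Request(AUTH_URL)
    if username and password:
        basic = base64.b64encode(f"{username}:{password}".encode()).decode()
        request.add_header("Authorization", f"Basic {basic}")
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)["token"]


def get_ratelimit_headers(token: str) -> Dict[str, str]:
    """Send a HEAD request for the probe manifest and return lower-cased headers."""
    request = urllib.request.Request(PROBE_URL, method="HEAD")
    request.add_header("Authorization", f"Bearer {token}")
    with urllib.request.urlopen(request, timeout=10) as response:
        return {name.lower(): value for name, value in response.headers.items()}


def parse_ratelimit(value: str) -> Tuple[int, Optional[int]]:
    """Parse a value such as '76;w=21600' into (76, 21600); w is the window in seconds."""
    count, _, params = value.partition(";")
    window = None
    for param in params.split(";"):
        key, _, val = param.strip().partition("=")
        if key == "w" and val:
            window = int(val)
    return int(count.strip()), window


def remaining_quota(headers: Dict[str, str]) -> Optional[Tuple[int, Optional[int]]]:
    """Return the parsed remaining quota, or None if no rate-limit header is present."""
    # Docker's documentation uses 'ratelimit-remaining'; the x- prefixed name
    # reported in this article is checked too, in case the registry returns it.
    for name in ("ratelimit-remaining", "x-ratelimit-remaining"):
        if name in headers:
            return parse_ratelimit(headers[name])
    return None
```

For example, `remaining_quota(get_ratelimit_headers(get_token()))` performs an anonymous check. Passing your own Docker Hub username and access token to `get_token` checks your account's quota instead.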

We also repeated the rate-limit check above without pulling anything ourselves. The value still decreased steadily on its own, which likely indicates that GitHub Actions workflows elsewhere were running and pulling images with the same credentials.
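The reasoning behind this observation can be made explicit with a small helper. It takes (timestamp, remaining) samples, collected by repeating the rate-limit check while pulling nothing ourselves. Any decrease between two samples is quota consumed by someone else, and any increase marks a reset. The result also gives a rough estimate of how often the quota resets.

This is a minimal Python sketch with two caveats: the sampling itself is left out, and treating every increase as a reset is an assumption. Consumption that happens across a reset is not visible and is therefore undercounted. The names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Sequence, Tuple


@dataclass
class QuotaSummary:
    consumed: int = 0                                  # total decrease between samples
    resets: List[float] = field(default_factory=list)  # timestamps where the value rose


def summarize(samples: Sequence[Tuple[float, int]]) -> QuotaSummary:
    """Summarize (timestamp, remaining) samples taken while we pulled nothing ourselves."""
    summary = QuotaSummary()
    for (_, prev), (t, cur) in zip(samples, samples[1:]):
        if cur < prev:
            # Someone else sharing the credentials consumed quota in this interval.
            summary.consumed += prev - cur
        elif cur > prev:
            # Assumed to be a quota reset; usage across the reset is not visible.
            summary.resets.append(t)
    return summary


def mean_reset_interval(resets: Sequence[float]) -> Optional[float]:
    """Average time between observed resets, or None with fewer than two resets."""
    if len(resets) < 2:
        return None
    gaps = [b - a for a, b in zip(resets, resets[1:])]
    return sum(gaps) / len(gaps)
```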

We thoroughly tested the capabilities of these credentials, and they appear to work only for pulling images. Note that Docker Hub imposes image pull limits, even on authenticated business users.

Impact

The rate limit seems to reset roughly every two minutes, but this behavior still reveals a potential vulnerability. An attacker could use these credentials to exhaust the rate limit. That could cause a denial of service (DoS) for GitHub Actions by depriving legitimate workflows of access to Docker Hub images.

This appears to be an unusual design choice by GitHub, as it introduces a potential single point of failure.

Suppose that's not the case, and GitHub has implemented mitigations against DoS or DDoS attacks. The fact remains that these credentials are shared across every instance of every hosted runner worldwide. Anyone who can run a workflow can read and reuse them, even if only to bypass the rate limits applied to unauthenticated Docker Hub pulls.

A question naturally arises: what would happen if, starting tomorrow, everyone in the world with workflows pulling images from Docker Hub began using these credentials?

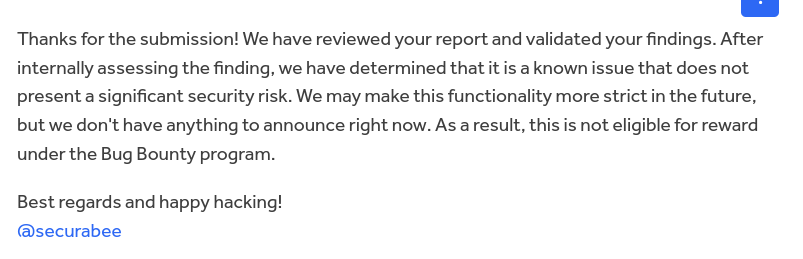

Following this unexpected discovery, we submitted our finding to GitHub through its Bug Bounty program. GitHub told us it would verify the report, and a week later this was its response:

Notably, GitHub acknowledged this as a known issue and is likely to address it with future hardening measures.

Conclusions

Vermilion offers a lightweight and efficient solution for quickly gathering sensitive data when access is limited. Its development was guided by the need for simplicity and speed. From its very first tests it has proven valuable, leading to findings such as the shared GitHub Actions credentials described above.

While Vermilion is not a replacement for full-fledged C2 frameworks, it serves as a practical tool for targeted use cases, particularly when time and access are constrained.

These Solutions are Engineered by Humans

Did you learn from this article? Perhaps you’re already familiar with some of the techniques above? If you find cybersecurity issues interesting, maybe you could start in a cybersecurity or similar position here at Würth Phoenix.