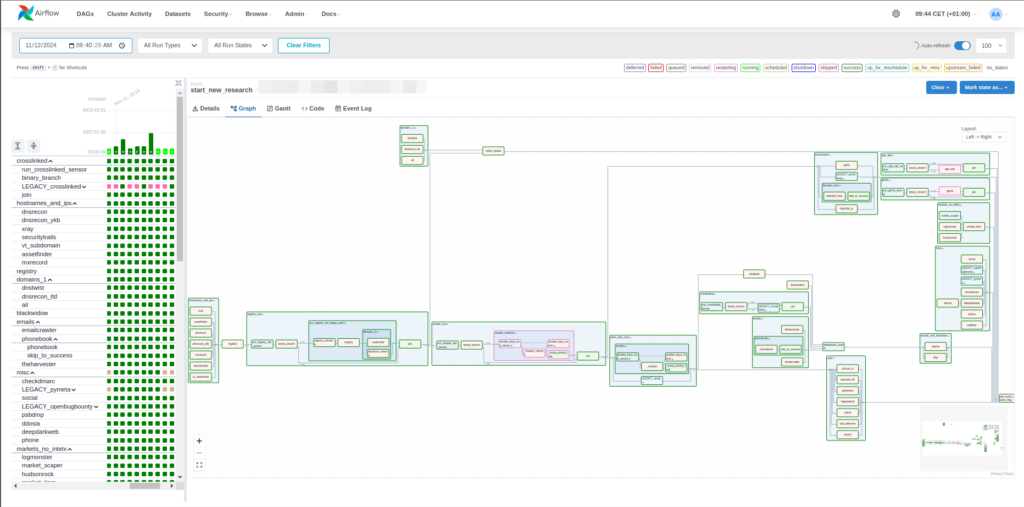

Scaling SATAYO: OSINT Research with Apache Airflow

SATAYO began as a proof of concept: a single-threaded script that gathered and analyzed OSINT (Open Source Intelligence) data on a single machine, with scaling considered only later. As SATAYO’s capabilities grew to serve more clients and monitor more domains, the team expanded the setup from one machine to several. However, the core design remained limited in scalability because each machine still ran its tasks in a single thread.

In this article, we’ll see how we transformed SATAYO from a limited, single-threaded setup into a scalable platform capable of handling thousands of OSINT tasks each day. We’ll explore the architectural challenges we faced, the key prerequisites defined for modernization, and how the adoption of Apache Airflow allowed SATAYO to meet the demands of a growing client base while improving performance and maintainability.

Initial Project Architecture

In its expanded setup, SATAYO’s architecture consisted of three types of machines:

- SATAYO Frontend: Hosts the web server that customers and analysts interact with, and manages user access to SATAYO’s data. It interfaces directly with the database to retrieve or store results, and requires read access to the shared storage where analysis results are saved.

- SATAYO Utility: Responsible for tasks that can be scaled horizontally, the utility machine handles relatively lightweight batch-processing tasks such as scraping websites or monitoring specific online channels. Like the frontend, it communicates with the database to upload the data its tasks produce.

- SATAYO Robot: The Robot machines handle domain-specific, sequential tasks that are typically more complex and computationally intensive. Because these tasks must execute linearly, the machines rely on an implicit dependency model to process the phases for each domain in a predefined order.

All of these machines rely on a shared SQL database to track client information, domains, and OSINT research activities. The database also stores metadata, including the status of each phase or task executed by SATAYO. For this modernization, we focused on how tasks are executed by the SATAYO Robot machines.

Limitations of the Initial Architecture

Although adding machines enabled SATAYO to process more domains, this incremental scaling presented several issues:

- Single-Threaded Execution Constraints: The initial single-threaded design meant each task had to run sequentially, creating inefficiencies when scaling horizontally across multiple machines.

- Resource Wastage: Each machine ran on a fixed schedule, with tasks waiting in a queue even when they could have been executed in parallel, which wasted computing power.

- Complex Maintenance and Scaling: Adding new machines to handle more domains required manual setup, with each machine needing configuration for task distribution, security, and dependencies.

- Database Bottlenecks and Log Management: Storing all logs and task statuses in the database added significant load and created potential bottlenecks, especially as SATAYO expanded to analyze more domains.

To address these limitations, SATAYO needed a scalable architecture that could effectively manage dependencies, distribute tasks, and streamline resource usage across multiple machines.

Requirements and Solution Analysis

Before starting this development, we outlined the following key prerequisites:

- Efficient Resource Utilization: The platform needed to maximize resource use by allowing tasks to run in parallel, minimizing idle time and resource waste.

- Task Dependency Management: SATAYO’s tasks often depend on a specific sequence. The new solution had to allow precise management of these dependencies.

- Scalability: Horizontal scalability was essential. The new system needed to allow for additional nodes to meet workload demands without excessive setup or reconfiguration.

- Open Source and Community Support: A preference for open-source solutions ensured flexibility and cost-effectiveness, while a strong community meant access to support and ongoing improvements.

- Task Isolation: Some SATAYO tasks require isolated environments for specific network or resource configurations. A solution that easily allowed containerization was essential.

- Comprehensive Monitoring: Monitoring capabilities were necessary to maintain system health and promptly detect issues.

Why We Chose Apache Airflow

We selected Apache Airflow because it met these requirements:

- Directed Acyclic Graphs (DAGs) allow clear and manageable task dependency definitions, which are crucial for SATAYO’s structured workflows

- Native Horizontal Scalability ensures SATAYO can easily expand its processing power by adding nodes

- Python Compatibility aligns well with SATAYO’s existing code, enabling a smooth transition

- Flexibility for Task Isolation with options for Docker and Kubernetes lets SATAYO maintain isolated environments as needed

- Robust Monitoring and an active open-source community round out Airflow’s suitability as a scalable, reliable orchestration solution

Airflow’s features collectively made it the optimal choice to support SATAYO’s modernization goals.
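To make the dependency idea concrete, here is a minimal sketch in plain Python, using the standard library's `graphlib` rather than Airflow itself. The phase names are hypothetical and are not SATAYO's actual phases. It shows how declaring dependencies explicitly lets a scheduler work out which phases must wait and which can run side by side.

```python
from graphlib import TopologicalSorter

# Hypothetical phases for one domain (assumption, not SATAYO's real phases).
# Each key depends on every phase listed in its set.
PHASES = {
    "subdomain_enumeration": set(),
    "dns_resolution": {"subdomain_enumeration"},
    "port_scan": {"dns_resolution"},
    "screenshot_capture": {"dns_resolution"},
    "report": {"port_scan", "screenshot_capture"},
}


def execution_waves(graph):
    """Group phases into waves; phases in the same wave do not depend on each other."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph contains a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything whose dependencies are done
        waves.append(ready)
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    for index, wave in enumerate(execution_waves(PHASES), start=1):
        print(index, wave)
```

In this sketch, `port_scan` and `screenshot_capture` land in the same wave, so they could run in parallel once `dns_resolution` finishes. A single-threaded script would have run them one after the other.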

New Architecture of SATAYO

The new SATAYO architecture, implemented with Apache Airflow, moves away from a fixed-interval task execution model to a DAG-based system. Its key components are:

- Containerization with Docker: By using Docker, each task can run in an isolated environment, ensuring compatibility across different setups

- Airflow Executors and DAGs: Airflow’s DAGs allow tasks to be executed according to their dependency graphs, making it easier to manage and scale processes across different domains and clients
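As a rough illustration of task isolation, the sketch below builds the `docker run` command for a single task with its own network and resource limits. The image name, network name, and limit values are assumptions made for the example; in an Airflow deployment this role would more likely be filled by an operator such as the Docker provider's `DockerOperator` than by a hand-built command.

```python
import shlex


def docker_run_command(image, task_args, network="bridge", memory="512m", cpus="1.0"):
    """Build the argument list that runs one task in a throwaway container."""
    return [
        "docker", "run",
        "--rm",                # remove the container once the task exits
        "--network", network,  # attach the task to a specific Docker network
        "--memory", memory,    # cap the memory available to the task
        "--cpus", cpus,        # cap the CPU share available to the task
        image,
        *task_args,
    ]


if __name__ == "__main__":
    # Hypothetical image and network names, for illustration only.
    cmd = docker_run_command(
        "satayo/port-scan:latest",
        ["--domain", "example.com"],
        network="scan-net",
    )
    print(shlex.join(cmd))
```

Returning an argument list rather than a single string keeps the command safe to pass to `subprocess.run` without shell quoting issues.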

Scheduled Tasks and Ad-Hoc Searches

The new SATAYO system uses Airflow mainly to schedule two types of searches:

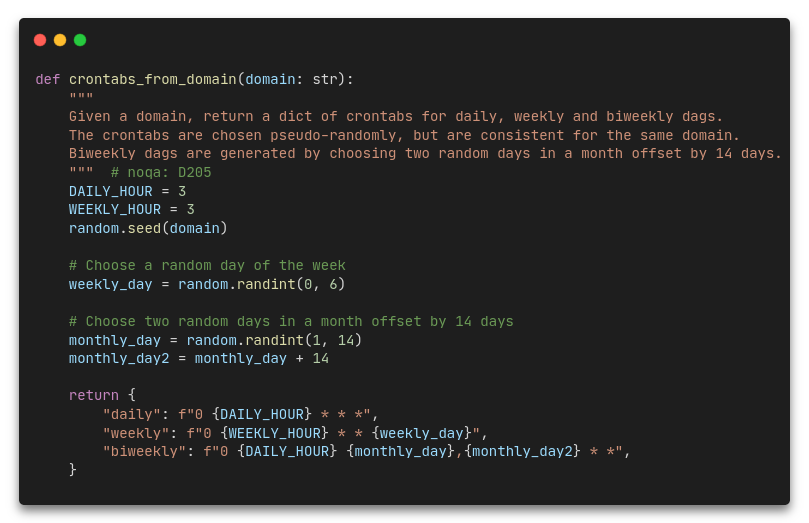

- Recurring Searches: SATAYO’s recurring searches are now scheduled based on a pseudo-random distribution to optimize resource usage

- Ad-Hoc New Research: For new or on-demand searches, Airflow triggers new DAGs that initiate a complete scan and analysis of the specified domains
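One way to spread recurring searches over the day is to derive each domain's start time from a hash of its name. The sketch below assumes a daily schedule and uses SHA-256. The article does not describe the distribution SATAYO actually uses, so treat both choices as illustrative.

```python
import hashlib


def daily_cron_for(domain):
    """Map a domain to a stable minute and hour, returned as a daily cron expression."""
    digest = hashlib.sha256(domain.encode("utf-8")).digest()
    # Use the first four bytes of the hash to pick a minute of the day (0..1439).
    slot = int.from_bytes(digest[:4], "big") % (24 * 60)
    hour, minute = divmod(slot, 60)
    return f"{minute} {hour} * * *"


if __name__ == "__main__":
    for name in ("example.com", "example.org", "example.net"):
        print(name, "->", daily_cron_for(name))
```

Because the offset depends only on the domain name, a domain keeps the same slot across restarts and redeployments, while different domains scatter across the day instead of all starting at the same minute.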

System Maintenance and Testing

The SATAYO upgrade involved rigorous testing and validation, with a Canary Deployment strategy used to roll out changes gradually. Additionally, Grafana-based dashboards were created to monitor system health and task status, giving administrators real-time visibility.

Future Enhancements

While Airflow has significantly improved SATAYO’s scalability and efficiency, future improvements could further enhance the platform:

- Kubernetes Integration: Transitioning to Kubernetes for container orchestration would improve Airflow’s fault tolerance and scalability

- Enhanced Log Aggregation: A centralized log management system could be integrated, making it easier to identify and troubleshoot issues

- CI/CD Pipeline: Automating deployment and testing through CI/CD would streamline updates and improve reliability

The updated architecture has laid a foundation for SATAYO to support growing cyber threat intelligence needs, positioning the platform to handle larger client volumes with greater resilience.

If you’re interested, you can read more about it in my Bachelor’s thesis: https://github.com/ardubev16/BSc-thesis/releases/download/v1.0.0/bevilacqua_lorenzo_computer_science_2023_2024.pdf
