How to Use Tornado for event based monitoring with Icinga 2 (Part 2/2)

Tornado is the spiritual successor of the NetEye EventHandler. It is an open-source, rule-based event processing engine designed to handle up to millions of events per second. We can leverage this capability to ingest all potentially interesting events from our entire infrastructure and react only to the ones that actually matter.

In this blog post we will explore how to use Tornado to build a good starting point for event-based monitoring. In the first part we saw how to teach Tornado to react to incoming events. This second part explores how to test that configuration from both the GUI and the CLI, and puts Tornado under a little stress test. If you want to try it out for yourself, you can build Tornado from the Tornado GitHub repository.

Sending Events

There are multiple ways to send Events to Tornado. The most convenient one is certainly the Graphical User Interface (GUI).

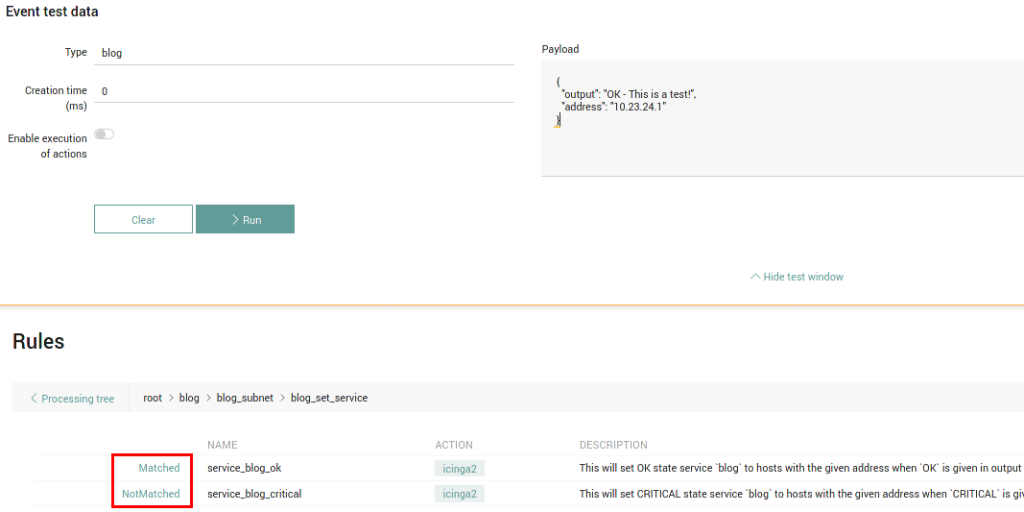

You can construct any event you like in the Testing Panel and send it to Tornado. Behind the scenes it uses the REST API exposed by Tornado.

If you prefer a command line interface, the same API can be accessed through the tornado-send-event utility. It takes the path to a file containing our Event as input and returns, as JSON output, a detailed report of which paths in the processing tree the Event traversed.

The third option is to open a TCP socket and stream Events to Tornado in JSONL format (one JSON object per line). This is by far the most performant option, but it offers no feedback about anything that happens afterwards, which makes it the most suitable option for collectors.
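To illustrate the third option, here is a minimal Python sketch that serializes Events as JSON lines and streams them over a single TCP connection. The host and port (`127.0.0.1`, `4747`) are assumptions about where a Tornado TCP endpoint might listen, so check your own configuration. The helper names `to_jsonl` and `send_events` are made up for this example.

```python
import json
import socket


def to_jsonl(event: dict) -> bytes:
    """Serialize one Event as a single JSON line: compact JSON plus a newline."""
    return (json.dumps(event, separators=(",", ":")) + "\n").encode("utf-8")


def send_events(events, host="127.0.0.1", port=4747):
    """Stream Events over one TCP connection (host and port are assumptions).

    Nothing is read back: this channel gives no feedback about processing.
    """
    with socket.create_connection((host, port)) as sock:
        for event in events:
            sock.sendall(to_jsonl(event))
```

Calling `send_events([event])` with a dictionary shaped like the Events in this post would write exactly one line to the socket. Because `json.dumps` escapes newlines inside strings, each Event is guaranteed to stay on its own line.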

Testing our Rules

Let’s create an event.json with the following content:

{
  "type": "blog",
  "created_ms": 0,
  "payload": {"output": "OK - This is a test!", "address": "10.23.24.1"}
}

You can send the event to Tornado using the command /usr/bin/tornado-send-event ./event.json. Depending on your current Tornado configuration, it will output something along these lines:

{
  "event": {
    "type": "blog",
    "created_ms": 0,
    "payload": {
      "address": "10.23.24.1",
      "output": "OK - This is a test!"
    }
  },
  "result": {
    "type": "Filter",
    "name": "root",
    "filter": {
      "status": "Matched"
    },
    "nodes": [
      {
        "type": "Filter",
        "name": "blog",
        "filter": {
          "status": "Matched"
        },
        "nodes": [
          {
            "type": "Filter",
            "name": "blog_subnet",
            "filter": {
              "status": "Matched"
            },
            "nodes": [
              {
                "type": "Ruleset",
                "name": "blog_set_service",
                "rules": {
                  "rules": [
                    {
                      "name": "service_blog_ok",
                      "status": "Matched",
                      "actions": [
                        {
                          "id": "icinga2",
                          "payload": {
                            "icinga2_action_name": "process-check-result",
                            "icinga2_action_payload": {
                              "exit_status": "0",
                              "filter": "host.address==\"10.23.24.1\" && service.name==\"blog\"",
                              "plugin_output": "OK - This is a test!",
                              "type": "Service"
                            }
                          }
                        }
                      ],
                      "message": null
                    },
                    {
                      "name": "service_blog_critical",
                      "status": "NotMatched",
                      "actions": [],
                      "message": null
                    }
                  ],
                  "extracted_vars": {}
                }
              }
            ]
          }
        ]
      }
    ]
  }
}

Here you can see which filters were traversed, and which rules matched.
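If you want to script this check rather than read the report by eye, the following Python sketch may help. It assumes that tornado-send-event prints nothing but the JSON report on stdout and that the report has the shape shown above (Filter nodes with `nodes`, Ruleset nodes with `rules.rules`). The function names are hypothetical.

```python
import json
import subprocess


def run_send_event(event_file, binary="/usr/bin/tornado-send-event"):
    """Run the CLI on an event file and parse stdout as JSON (assumed pure JSON)."""
    completed = subprocess.run(
        [binary, event_file], capture_output=True, text=True, check=True
    )
    return json.loads(completed.stdout)


def matched_rules(node, path=""):
    """Recursively collect 'filter/.../ruleset/rule' paths of all matched rules."""
    here = f"{path}/{node['name']}" if path else node["name"]
    if node["type"] == "Filter":
        # Children of a filter that did not match are not considered.
        if node["filter"]["status"] != "Matched":
            return []
        found = []
        for child in node.get("nodes", []):
            found.extend(matched_rules(child, here))
        return found
    if node["type"] == "Ruleset":
        return [
            f"{here}/{rule['name']}"
            for rule in node["rules"]["rules"]
            if rule["status"] == "Matched"
        ]
    return []
```

For the report above, `matched_rules(report["result"])` yields a single entry, `root/blog/blog_subnet/blog_set_service/service_blog_ok`.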

Coming full circle

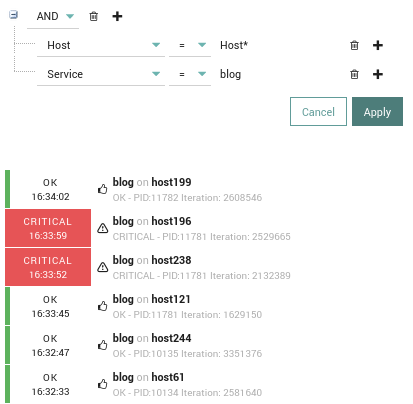

This works well when we send a single event to Tornado, but how does it behave under load? As you may recall from the previous post, we created rules that match the following conditions:

- Event Type equals blog
- IP Address is in the range of our subnet 10.23.24.0/24
- Whenever the payload starts with the word CRITICAL, a passive check result for the service blog on the host with the same Address should be set
- Whenever the payload starts with the word OK, the same should happen with an OK state
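The conditions above can be restated as a small Python function. This is only a sketch of the decision logic, not how Tornado evaluates its rules. It assumes the rules inspect the `output` and `address` fields of the payload, as in the sample Event, and it uses the usual Icinga exit codes (0 for OK, 2 for CRITICAL). The name `check_result_for` is hypothetical.

```python
import ipaddress

SUBNET = ipaddress.ip_network("10.23.24.0/24")


def check_result_for(event):
    """Return the exit status a matching Event would set, or None if no rule applies."""
    if event.get("type") != "blog":
        return None
    payload = event.get("payload", {})
    try:
        address = ipaddress.ip_address(payload.get("address", ""))
    except ValueError:
        return None  # missing or malformed address
    if address not in SUBNET:
        return None
    output = payload.get("output", "")
    if output.startswith("CRITICAL"):
        return 2  # CRITICAL
    if output.startswith("OK"):
        return 0  # OK
    return None
```

Checking the cheap condition (the event type) first mirrors the filter strategy from part 1: most events are discarded before any more expensive comparison takes place.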

The attached random_event.pl does the following:

- Generate a random four letter event Type: 26^4 possibilities
- Generate a random number for the third octet of the IP Address between 20 and 30: 10 possibilities
- Alternate between states CRITICAL and OK on each iteration
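For readers who do not want to read Perl, the behaviour described above can be approximated in Python as follows. This is not the attached random_event.pl, only a sketch of the same idea. The octet bounds (20 to 29, giving 10 values), the fixed last octet and the output text are assumptions made for illustration.

```python
import itertools
import random
import string


def random_events(rng=None):
    """Yield an endless stream of random Events, alternating CRITICAL and OK."""
    rng = rng or random.Random()
    for state in itertools.cycle(("CRITICAL", "OK")):
        event_type = "".join(rng.choices(string.ascii_lowercase, k=4))
        third_octet = rng.randrange(20, 30)  # 20..29, assumed bounds (10 values)
        yield {
            "type": event_type,
            "created_ms": 0,
            "payload": {
                "output": f"{state} - generated event",  # assumed output text
                "address": f"10.23.{third_octet}.1",  # last octet fixed (assumption)
            },
        }
```

A finite batch can be taken with `itertools.islice(random_events(), 1000)` and handed to whatever sender you use.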

There is exactly one way to match blog and one way to match our octet 24, so each generated event has a probability of 1/(26^4 * 10) of triggering either the OK or the CRITICAL rule. In other words, about one in 4.6 million generated events (26^4 * 10 = 4,569,760) will trigger a passive check result.
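The arithmetic is easy to verify. The short Python sketch below computes the exact odds and the expected number of matches for a given number of generated events; the constant and function names are only illustrative.

```python
from fractions import Fraction

TYPE_POSSIBILITIES = 26 ** 4  # four random letters: 456,976 combinations
OCTET_POSSIBILITIES = 10      # third octet, as stated in the list above

# Exactly one type ("blog") and one octet (24) match.
match_probability = Fraction(1, TYPE_POSSIBILITIES * OCTET_POSSIBILITIES)


def expected_matches(events_generated):
    """Expected number of passive check results among this many random events."""
    return float(events_generated * match_probability)
```

The denominator is 4,569,760, so a generator producing, say, three million events per minute would be expected to trigger about 0.66 passive check results per minute.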

An additional script, setup_environment.sh, injects 254 hosts into your existing Director installation, so that your actions are not rejected by icinga2 for non-existent host/service combinations.

IMPORTANT: Consider adapting the scripts if you use the subnet 10.23.24.0/24 in production, as critical events will be generated.

When running the random_event.pl script, ALL generated events are sent to Tornado via a single TCP socket. If you wish to add more stress, you can run several instances of the script in parallel. Whenever the right conditions are met, you can check the result either in the Tornado and icinga2 log files or in the Event Overview in Icingaweb2.

Each instance of the script should generate a few million Events every minute, so you should see the first results pretty soon. Looking at the resource usage, you will see that each event-generating process takes up an entire CPU and generates events as fast as possible.

Thanks to our carefully chosen filter strategy (see part 1), and thanks to the efficiency of Tornado (big shout out to Rust!), you will notice that Tornado itself remains relatively bored. Which is nice.

Resources

Rules, Setup Script and Stress Test: Download