As traffic to applications deployed on OpenShift grows, it’s essential to gain visibility into the flow of data entering your cluster. Monitoring this incoming traffic helps administrators maintain optimal performance, reduce security risks, and quickly resolve any emerging issues.

Enabling Logging

All traffic directed to OpenShift Routes passes through a dedicated set of router pods in the openshift-ingress namespace, usually spread across different nodes. By enabling access logging on these pods, you can monitor all incoming traffic.

Fortunately, these pods are managed by the Ingress Operator, so enabling access logging is straightforward: simply edit the `default` IngressController custom resource (CR) in the openshift-ingress-operator namespace:

```shell
oc edit -n openshift-ingress-operator IngressController default
```

```yaml
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  name: default
  namespace: openshift-ingress-operator
spec:
  # ... other spec fields above
  logging:
    access:
      destination:
        type: Container
```

When you save and close the editor, the Ingress Operator detects the change and rolls out a new set of router pods with the updated configuration. With the `Container` destination, each router pod gets an additional sidecar container named `logs` that writes the access log to its standard output. As soon as the new pods receive requests, they start logging them like this:

```text
2025-03-30T20:15:01.607643+00:00 node04 node04.myopenshiftcluster.lan haproxy[442164]: 10.63.63.63:57682 [30/Mar/2025:20:15:01.606] fe_sni~ fe_sni/<NOSRV> -1/-1/-1/-1/0 400 0 - - CR-- 6/3/0/0/0 0/0 "<BADREQ>"
2025-03-30T20:15:01.607806+00:00 node04 node04.myopenshiftcluster.lan haproxy[442164]: 10.63.63.63:57682 [30/Mar/2025:20:15:01.575] public_ssl be_sni/fe_sni 2/0/32 6281 -- 5/3/2/2/0 0/0
2025-03-30T20:15:04.603424+00:00 node04 node04.myopenshiftcluster.lan haproxy[442164]: 10.63.63.63:45836 [30/Mar/2025:20:15:04.601] public be_http:myservice:myservice/pod:myservice-6b9776cf9b-pl5jj:myservice::10.129.3.204:8080 0/0/0/1/1 200 220 - - --NI 5/1/0/0/0 0/0 "GET /v2/config/ HTTP/1.1"
```

The format closely matches HAProxy's standard log format, because the OpenShift router is built on HAProxy. The first and third lines follow HAProxy's HTTP log format, while the second line, from the `public_ssl` frontend, follows the shorter TCP log format.
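To get a feel for what "parsing" means here, the sketch below splits an HTTP-mode line like the ones above into named fields using a simplified Python regex. It is an illustration only, not the grok pattern used by the Elastic HAProxy integration. The field names (`frontend`, `backend`, `timers`, ...) are hypothetical, the prefix is assumed to end with `haproxy[<pid>]: ` as in the samples, and the timer labels `TR/Tw/Tc/Tr/Ta` are assumed from recent HAProxy documentation of the HTTP log format. TCP-mode lines such as the `public_ssl` one are deliberately not matched.

```python
from __future__ import annotations

import re
from typing import Any, Optional

# Simplified pattern for HAProxy's HTTP log format as seen in the router's
# access log. Illustrative only; NOT the Elastic HAProxy integration's grok.
HTTP_LOG_RE = re.compile(
    r'^(?P<syslog_prefix>.*?) haproxy\[(?P<pid>\d+)\]: '
    r'(?P<client_ip>\S+):(?P<client_port>\d+) '
    r'\[(?P<accept_date>[^\]]+)\] '
    r'(?P<frontend>\S+) '
    r'(?P<backend>[^/\s]+)/(?P<server>\S+) '
    r'(?P<timers>-?\d+(?:/-?\d+){4}) '
    r'(?P<status>\d{3}) '
    r'(?P<bytes_read>\d+) '
    r'(?P<request_cookie>\S+) (?P<response_cookie>\S+) '
    r'(?P<termination_state>\S{4}) '
    r'(?P<connections>\d+(?:/\d+){4}) '
    r'(?P<queues>\d+/\d+) '
    r'"(?P<request>[^"]*)"$'
)

# Assumed timer labels (recent HAProxy versions); older docs use other names.
TIMER_NAMES = ("TR", "Tw", "Tc", "Tr", "Ta")


def parse_http_log(line: str) -> Optional[dict[str, Any]]:
    """Split one HTTP-mode access log line into named fields.

    Returns None for lines that do not match, e.g. TCP-mode lines.
    """
    match = HTTP_LOG_RE.match(line)
    if match is None:
        return None
    fields: dict[str, Any] = match.groupdict()
    fields["timers"] = dict(zip(TIMER_NAMES, map(int, fields["timers"].split("/"))))
    fields["status"] = int(fields["status"])
    fields["bytes_read"] = int(fields["bytes_read"])
    # Placeholders like "<BADREQ>" are not "METHOD PATH VERSION" triples.
    parts = fields["request"].split(" ")
    method, path, version = parts if len(parts) == 3 else (None, None, None)
    fields.update(method=method, path=path, http_version=version)
    return fields


http_line = (
    '2025-03-30T20:15:04.603424+00:00 node04 node04.myopenshiftcluster.lan '
    'haproxy[442164]: 10.63.63.63:45836 [30/Mar/2025:20:15:04.601] public '
    'be_http:myservice:myservice/pod:myservice-6b9776cf9b-pl5jj:myservice::10.129.3.204:8080 '
    '0/0/0/1/1 200 220 - - --NI 5/1/0/0/0 0/0 "GET /v2/config/ HTTP/1.1"'
)
tcp_line = (
    '2025-03-30T20:15:01.607806+00:00 node04 node04.myopenshiftcluster.lan '
    'haproxy[442164]: 10.63.63.63:57682 [30/Mar/2025:20:15:01.575] public_ssl '
    'be_sni/fe_sni 2/0/32 6281 -- 5/3/2/2/0 0/0'
)

record = parse_http_log(http_line)
print(record["status"], record["method"], record["path"])  # 200 GET /v2/config/
print(record["timers"])  # {'TR': 0, 'Tw': 0, 'Tc': 0, 'Tr': 1, 'Ta': 1}
print(parse_http_log(tcp_line))  # None
```

Note how the backend and server names contain colons (`be_http:myservice:myservice`, `pod:...:8080`): the sketch splits them on the `/` only, which is also why a naive "split on colons" approach would break on these lines.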

Parsing the Logs

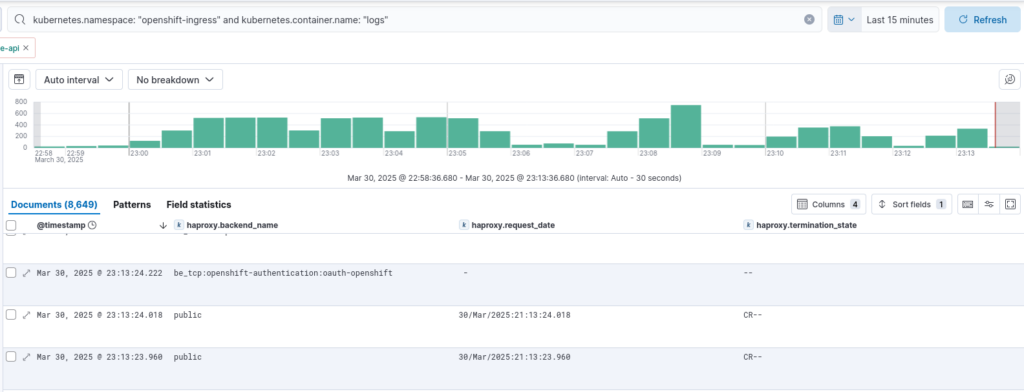

If Elastic Agent is installed in your OpenShift cluster with the Kubernetes integration, the stdout of every pod, including the router pods, is collected and sent to Elasticsearch. If you haven’t installed it yet, I recommend checking out my previous article, which provides a detailed guide on how to set it up.

By default, the Kubernetes integration stores each collected log line as a single string in the `message` field of the indexed documents. This makes it cumbersome to run targeted searches or to write detection rules against specific parts of the line.

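To make these logs searchable, we can use an ingest pipeline that instructs Elasticsearch to parse the contents of the `message` field. Fortunately, the HAProxy integration already ships an ingest pipeline that does exactly this, so we can reuse it: our own pipeline only needs to call it for documents coming from the router pods' `logs` container. Keep in mind that the pipeline name includes the integration version (`1.16.0` in this example), so it has to match the version of the HAProxy integration installed in your Elastic deployment.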
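The key part of the pipeline is the condition that decides which documents are handed to the HAProxy parser. As a rough Python sketch of that selection logic (an illustration, not something Elasticsearch runs), assuming documents carry the Kubernetes integration's `kubernetes.namespace` and `kubernetes.container.name` fields, a document qualifies only when it comes from the `openshift-ingress` namespace and the `logs` container. In this sketch, documents without that metadata are simply skipped instead of raising an error. The sample documents and the `myservice` namespace are hypothetical.

```python
from __future__ import annotations

from collections.abc import Mapping
from typing import Any, Optional


def _get(mapping: Optional[Any], key: str) -> Any:
    """Return mapping[key], or None when the parent object is missing.

    Mimics null-safe access (a?.b): documents without Kubernetes
    metadata fail the check instead of raising an exception.
    """
    if not isinstance(mapping, Mapping):
        return None
    return mapping.get(key)


def should_use_haproxy_pipeline(doc: Mapping[str, Any]) -> bool:
    """True if the document comes from a router pod's `logs` sidecar."""
    kubernetes = _get(doc, "kubernetes")
    container = _get(kubernetes, "container")
    return (
        _get(kubernetes, "namespace") == "openshift-ingress"
        and _get(container, "name") == "logs"
    )


# Hypothetical documents, reduced to the fields the check looks at.
samples = {
    "router access log": {
        "kubernetes": {"namespace": "openshift-ingress", "container": {"name": "logs"}},
        "message": "(HAProxy access log line)",
    },
    "application log": {
        "kubernetes": {"namespace": "myservice", "container": {"name": "myservice"}},
        "message": "GET /v2/config/ 200",
    },
    "no Kubernetes metadata": {"message": "something else"},
}

for label, doc in samples.items():
    print(f"{label}: {should_use_haproxy_pipeline(doc)}")
```

To check the real pipeline against sample documents, Elasticsearch's simulate pipeline API (`POST _ingest/pipeline/<pipeline-id>/_simulate`) is the more faithful tool, since it runs the actual processors and Painless condition. The pipeline below expresses the selection in Painless and delegates matching documents to the HAProxy integration's pipeline.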

```json
{
  "description": "Automatic parsing of OpenShift ingress logs",
  "version": 1,
  "processors": [
    {
      "pipeline": {
        "description": "Kubernetes OpenShift Container Logs (HAProxy)",
        "name": "logs-haproxy.log-1.16.0",
        "if": "ctx.kubernetes?.namespace == 'openshift-ingress' && ctx.kubernetes?.container?.name == 'logs'"
      }
    }
  ],
  "on_failure": [
    {
      "set": {
        "field": "error.message",
        "value": "{{ _ingest.on_failure_message }}",
        "override": false
      }
    },
    {
      "set": {
        "field": "error.pipeline",
        "value": "{{ _ingest.on_failure_pipeline }}",
        "override": false
      }
    },
    {
      "set": {
        "field": "error.processor",
        "value": "{{ _ingest.on_failure_processor_type }}",
        "override": false
      }
    },
    {
      "set": {
        "field": "error.tag",
        "value": "{{ _ingest.on_failure_processor_tag }}",
        "override": false
      }
    }
  ]
}
```

The null-safe operator (`?.`) in the condition keeps documents without Kubernetes metadata from raising a null pointer exception; they are simply left unparsed.

Conclusion

Once this pipeline is in place, every access log line from the OpenShift routers is parsed automatically, and the resulting fields follow the Elastic Common Schema (ECS), just as they do for the HAProxy integration. As a result, existing security rules built on that integration should work on these logs as well.

If you have any questions or suggestions for improvement, please don’t hesitate to contact me!

These Solutions are Engineered by Humans

Did you find this article interesting? Does it match your skill set? Programming is at the heart of how we develop customized solutions. In fact, we’re currently hiring for roles just like this and others here at Würth Phoenix.