Introduction to Container Resource Management and What We Can Learn for Monitoring

Recently, I’ve been deeply involved in OpenShift monitoring tasks, including configuring Grafana dashboards and creating Prometheus alerts. During this time, I’ve focused on effectively monitoring container resources such as CPU and memory. Container orchestration platforms like Kubernetes and OpenShift require efficient resource utilization and precise resource management in order to optimize performance and ensure application stability.

In this article, I’ll detail how resource management works in modern Linux distributions through CPU regulation. I’ll explain the role of Linux namespaces and control groups in this process, and I’ll also provide a few tips on effectively monitoring and fine-tuning these resources to achieve optimal performance.

What is a Container?

Before delving deeper into the technical details of resource management, it’s crucial to understand the fundamental unit these techniques are designed to manage: the container. Containers have revolutionized software development and deployment by enabling applications to run in isolated and portable environments. This ensures that one container in a given environment cannot affect another, resulting in security, stability, and scalability.

But what exactly is a container, and how does it leverage Linux kernel features to provide this functionality? Let’s explore this foundational concept.

A container is built on several new features of the Linux kernel, with the two main components being namespaces and cgroups. Let’s look at namespaces first.

Linux namespaces: The foundation of containers

Namespaces are responsible for isolation, and ensure that access to the rest of the system is strictly limited from within the container while making it appear as if the container is operating in its own standalone environment.

Linux namespaces come in various types, including but not limited to:

- PID Namespace: Isolation of process identifiers

- Net Namespace: Isolation of network resources (e.g., IP addresses and ports)

- Mount Namespace: Isolation of filesystems, allowing a container to have its own filesystem

- User Namespace: Isolation of users and groups, helping to restrict privileges within individual containers

Creating a Linux namespace is quite straightforward and can be done using the `unshare` utility to create a separate process. Let’s look at an example.

Open a terminal in a Linux system and type this command:

unshare --fork --pid --mount-proc bash

The above command creates a separate process and assigns a bash shell to it. Then when I run the `ps` command, we’ll see that only two processes are running: `bash` and `ps`:

root@debian:/# ps aux

...

root ... S+ 10:12 0:00 sudo unshare --fork --pid --mount-proc bash

root ... Ss 10:12 0:00 sudo unshare --fork --pid --mount-proc bash

root ... S 10:12 0:00 unshare --fork --pid --mount-proc bash

...

If I open another terminal and check `ps` there, we’ll see the `unshare` process running. This is similar to listing containers using the `docker ps` command:

root@debian:/# ps aux

...

root ... S+ 10:12 0:00 sudo unshare --fork --pid --mount-proc bash

root ... Ss 10:12 0:00 sudo unshare --fork --pid --mount-proc bash

root ... S 10:12 0:00 unshare --fork --pid --mount-proc bash

...To exit the namespace, use the `exit` command.

Managing resources in Linux and understanding the concept of restricting allocation within the container world

How can we limit the resources of individual namespaces so that they don’t consume the resources of another namespace or the host system? For this, unsurprisingly, another Linux kernel feature called Linux Control Groups comes into play. While namespaces isolate resources, control groups restrict and regulate their usage.

Control Groups, or cgroups is a fundamental tool for resource management in modern Linux systems. Cgroups are a Linux kernel feature that takes care of resource allocation (CPU time, memory, network bandwidth, I/O), prioritization and accounting. In addition to being a Linux primitive, cgroups are also a building block of containers, so without cgroups there would be no containers. Cgroups allow the regulation of the following resources:

- CPU: Access limits to processor cores

- Memory: Maximum memory usage limits

- Disk I/O: Restrictions on disk operations

- Network: Quotas for network traffic

The systemd and cgroup2 hierarchy

Each process and service is placed in its own cgroup, making them easier to manage and regulate.

- Hierarchy basics and structure:

- Root group (-.slice): This is the root of the cgroup2 hierarchy and includes all processes by default. All subordinate groups originate from here.

- Segmented groups (scope and slice):

- Slices: A slice is a logical grouping of services or processes. For example, system.slice is the default group for most system processes, while user.slice is for user processes.

- Scopes: A scope is a lower-level group created dynamically, for instance, when an application is launched with systemd-run. These groups are short-lived and focus on a specific task (scope). I’ll use this in my example below.

- Hierarchical structure: Each slice and scope in cgroup2 can be further divided into subcategories (subgroups). For instance, you can create a web.slice group for a web service and further divide it into subgroups for its components.

- Resource management: Using systemd, resource limits like CPU, memory, and I/O can be set for groups. These limits are inherited by subgroups lower in the hierarchy but can also be overridden.

This hierarchy resides in /sys/fs/cgroup/, which is the cgroup filesystem (cgroupfs). There you’ll find subtrees for all Linux processes.

Imagine a Kubernetes node with two containers running on it. The systemd and cgroup2 hierarchy would look like this:

/sys/fs/cgroup/

└── kubepods.slice/

├── kubepods-besteffort.slice/

│ └── ...

├── kubepods-guaranteed.slice/

│ └── ...

└── kubepods-burstable.slice/

└── kubepods-burstable-pod<SOME_ID>.slice/

├── crio-<CONTAINER_ONE_ID>.scope/

│ ├── cpu.weight

│ ├── cpu.max

│ ├── memory.min

│ └── memory.max

└── crio-<CONTAINER_TWO_ID>.scope/

├── cpu.weight

├── cpu.max

├── memory.min

└── memory.maxAll Kubernetes groups are located in the kubepods.slice/ subdirectory, which contains additional subdirectories: kubepods-besteffort.slice/, kubepods-burstable.slice/, and kubepods-guaranteed.slice/, corresponding to each QoS (Quality of Service) type. Below these directories, you’ll find directories for each Pod, and within them, additional directories for each container.

At each level, there are files like “cpu.weight” or “cpu.max” that determine how much of a given resource – e.g., CPU – this group can use. For clarity, the example above shows these files only at the deepest level. In this hierarchy, you can set separate CPU and memory limits for the containers, while both will inherit rules from their parent slice as part of the larger group.

Let’s take a look at some of the benefits of this hierarchical structure:

- Flexible regulation: Easily define specific limits and priorities

- Resource isolation: Each process only accesses resources within its own group

- Monitorability: The system is easy to track and monitor, as cgroup2 provides detailed information about every process and group

- Management tools: Use the

systemctlandsystemd-runcommands to manage and monitor groups as well as configure resource limits

Now let’s take a closer look at how CPU requests and limits are configured at the cgroup2 level. It’s important to understand what these settings actually mean when applied to a container in Kubernetes or OpenShift, and what processes occur under the hood. This knowledge helps us grasp how our configurations affect the container’s CPU access and the overall behavior of the system.

The CPU limit and CPU request are mapped to the cgroup2 subsystem using two different mechanisms:

cpu.limit → cpu.max: Controls the absolute upper limit of CPU usagecpu.request → cpu.weight: Determines the CPU priority or share in a shared environment

CPU Request

cpu.request defines the relative priority of a container compared to others when the CPU is overcommitted.

Kubernetes sets this value in the cpu.weight file, which assigns CPU priority on a linear scale:

- The default priority for CPU request is 1024

To ensure compatibility, this default value was kept from the first version of cgroup (v1). In cgroup2, it is dynamically recalculated.

- The weight can be calculated using the formula:

cpu.weight = request × 1024 / 1000(In practice, the value may be slightly adjusted due to rounding.)

Example:

- If

cpu.requestis 100m (0.1 CPU):cpu.weight = 100 × 1024 / 1000 = 102

- Kubernetes sets:

echo "102" > /sys/fs/cgroup/<container>/cpu.weight

CPU Limit

cpu.limit specifies the maximum amount of CPU time a container can use:

- Kubernetes sets the

cpu.maxfile in the cgroup2 group:cpu.max = <quota> <period>

- The default period for Kubernetes (and the Linux kernel) is 100,000 µs (100 ms), so the quota is calculated as:

quota = limit × period

Example:

- If

cpu.limitis 500m (0.5 CPU):quota = 0.5 × 100000 = 50000 µs

- Kubernetes sets:

echo "50000 100000" > /sys/fs/cgroup/<container>/cpu.max

The two numbers in the cpu.max file (quota and period) define a ratio rather than an absolute value. The system determines how much CPU time the group can use based on the quota-to-period ratio relative to the total time available.

Important note: The ratio is independent of the number of physical CPUs! The allowed CPU usage applies to all available CPU cores. For example, on a 4-core system:

cpu.max = 100000 100000: The group uses 1 CPU core’s worth of time, but this can be spread across any corecpu.max = 200000 100000: The group uses up to 2 CPU core’s worth of capacity, distributed across the system’s cores

Summary

| Kubernetes value | cgroup2 file | Example value |

| CPU Limit | cpu.max | 500m → 50000 / 100000 (0.5 CPU limit) |

| CPU Request | cpu.weight | 100m → 0.1 × 1024 = 102 (0.1 CPU request) |

What happens if only “request” or only “limit” is specified?

- If only “request” is specified:

- Kubernetes sets only the

cpu.weightfile. The container does not have an absolute limit, meaning it shares CPU resources based on its relative priority.

- Kubernetes sets only the

- If only “limit” is specified:

- Kubernetes sets only the

cpu.maxfile but does not explicitly define priority (weight). The defaultcpu.weightvalue (cgroup1=1024, cgroup2=102 ) is applied.

- Kubernetes sets only the

To summarize the above points, here are a few important notes:

- Requests determine priority:

- The

requestvalue defines how CPU resources are distributed among containers during contention, based on their relative priority

- The

- Limits impose a ceiling:

- Even if a container has high priority due to its request, the limit ensures that it cannot use more CPU than the defined maximum

- Scheduling considerations:

- The scheduler uses the

requestvalues to ensure that the node has enough CPU capacity to meet the minimum guaranteed requirements for each container (POD)

- The scheduler uses the

This combination ensures that containers share CPU resources in a balanced and efficient manner within the Kubernetes cluster.

Demo Time

Instead of focusing solely on theory, let’s explore a straightforward practical example. For this demonstration, I’ll be using a 4-core virtual machine running Debian Linux, with minimal resource usage from background system processes.

In this scenario, I’ll simulate a high-load situation to illustrate how performance metrics respond and, importantly, how to interpret these values effectively. For simplicity, I won’t be working with Kubernetes and containers right now, but the principles remain the same at the cgroup level.

Requirements

Install stress-ng for simulating CPU load:

- For Debian/Ubuntu:

sudo apt install stress-ng - For CentOS/RedHat:

sudo dnf install stress-ng

Simulate CPU load

First, start stress-ng to simulate 90% CPU usage on all 4 cores. Use the systemd-run command to automatically add the process to a cgroup2.

systemd-run --scope stress-ng -c 0 -l 90With the systemd-run tool the stress-ng command has been added to the cgroups2 system and was automatically passed some default settings, including the CPU priority (cpu.weight), which is important in our case. This can easily be viewed in the cgroups filesystem. Notice that the default priority value in cgroup2 is 100.

Steps to verify:

1. Find the PID of the stress-ng process and locate it in the filesystem:

ps a | grep -e stress-ng

Example output:

17109 pts/2 SL+ 0:00 /usr/bin/stress-ng -c 0 -l 90

17110 pts/2 R+ 13:09 /usr/bin/stress-ng -c 0 -l 902. Locate the PID in the cgroup hierarchy:

grep -rs "17109" /sys/fs/cgroup/

Example output:

/sys/fs/cgroup/system.slice/run-rf85508f49cc84ee5b12f2fe92c8c9015.scope/cgroup.procs:17109

/sys/fs/cgroup/system.slice/run-rf85508f49cc84ee5b12f2fe92c8c9015.scope/cgroup.threads:17109 3. Check the default CPU weight:

cat /sys/fs/cgroup/system.slice/run-rf85508f49cc84ee5b12f2fe92c8c9015.scope/cpu.weight

Example output: 100

Now, let’s run another `stress-ng` process to perform exact matrix calculations and measure the time it takes to complete, using the default settings:

systemd-run --scope stress-ng --matrix 4 --matrix-ops 200000 --times

Example output:

Running scope as unit: run-rc720219404504a3cbd6266b12dfd27ca.scope

stress-ng: info: [1614893] defaulting to a 86400 second (1 day, 0.00 secs) run per stressor

stress-ng: info: [1614893] dispatching hogs: 4 matrix

stress-ng: info: [1614893] for a 42.54s run time:

stress-ng: info: [1614893] 170.18s available CPU time

stress-ng: info: [1614893] 85.12s user time ( 50.02%)

stress-ng: info: [1614893] 0.02s system time ( 0.01%)

stress-ng: info: [1614893] 85.14s total time ( 50.03%)

stress-ng: info: [1614893] load average: 4.65 1.56 1.32

stress-ng: info: [1614893] successful run completed in 42.54sExplanation:

--matrix 4: runs matrix operations on 4 threads--matrix-ops 200000: each thread performs 200,000 matrix operations--times: provides detailed timing information (real time, CPU time, etc.)

Since no custom CPU weight was specified, the task ran with the default weight (cpu.weight=100), taking 42.54 seconds to complete.

Testing with custom CPU priority

The CPU weight can be adjusted between 2 and 10000. Let’s assign a weight of 300 to the application (--property CPUWeight=300) and measure the difference. Recall that with the default cpu.weight=100, the script took 42.54 seconds. This time, we expect it to complete faster due to the significantly higher weight:

systemd-run --scope --property CPUWeight=300 stress-ng --matrix 4 --matrix-ops 200000 --times

Example output:

Running scope as unit: run-r09dae681eec34bc7aa2ada4581da241c.scope

stress-ng: info: [1614935] defaulting to a 86400 second (1 day, 0.00 secs) run per stressor

stress-ng: info: [1614935] dispatching hogs: 4 matrix

stress-ng: info: [1614935] for a 28.74s run time:

stress-ng: info: [1614935] 114.98s available CPU time

stress-ng: info: [1614935] 84.85s user time ( 73.80%)

stress-ng: info: [1614935] 0.02s system time ( 0.02%)

stress-ng: info: [1614935] 84.87s total time ( 73.81%)

stress-ng: info: [1614935] load average: 5.43 3.35 2.13

stress-ng: info: [1614935] successful run completed in 28.74sObservations:

- With the default

cpu.weight=100, the script completed in 42.54 seconds - With

cpu.weight=300, the same script completed in 28.74 seconds, achieving a 30% performance improvement due to the increased CPU priority

Now let’s look at a simple example of CPU Quota (cpu.max) to demonstrate CPU throttling.

Step 1: Run the stress-ng command

Use the familiar command but set it to utilize 100% CPU and configure the CPU quota to 50% (50000 100000 in cpu.max):

systemd-run --scope --property CPUQuota=50% stress-ng -c 0 -l 100

Step 2: Observe the CPU throttling in htop

The htop output for CPU usage will look something like this:

0 [|||||||||| ] 12.5% 1 [|||||||||| ] 12.6% 2 [|||||||||| ] 12.5% 3 [|||||||||| ] 12.8%

Explanation:

- CPU throttling is evident as each core’s usage is restricted

- Since there are 4 cores, the 50% quota is evenly divided among them, resulting in approximately 12.5% usage per core

Step 3: Adjust the CPU quota to allow 50% usage per core

To allow each core to utilize 50% of its capacity, set a 200% CPU quota:

systemd-run --scope --property CPUQuota=200% stress-ng -c 0 -l 100

If your system has 8 cores and you want 50% usage per core, you would need to set the CPU quota to 400%. For example:

systemd-run --scope --property CPUQuota=400% stress-ng -c 0 -l 100

This configuration ensures proportional throttling based on the total number of cores and the specified quota.

A few suggestions for effective monitoring and resource management

Monitoring Tools:

- Utilize tools like

kubectl top,- kubectl top pod

--namespace=<namespace>

- kubectl top pod

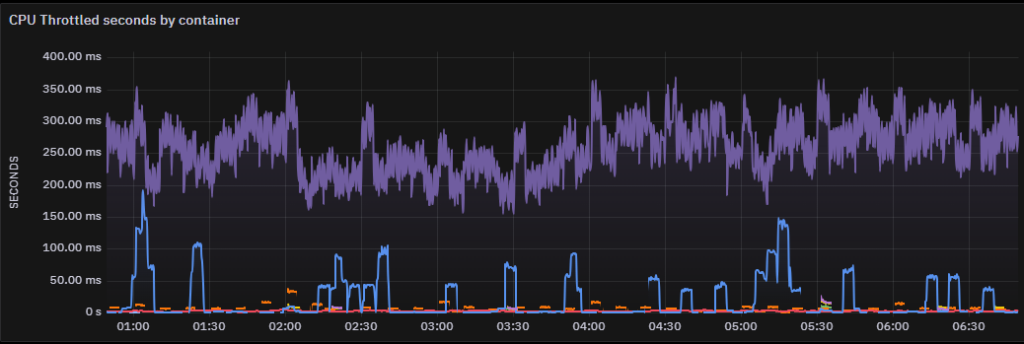

- Prometheus, and Grafana to monitor resource usage, visualize metrics, and receive alerts for potential issues:

sum(rate(container_cpu_cfs_throttled_seconds_total{node=~"^$Node$",namespace="$Namespace",container=~"$Container",pod=~"$Pod",container!="",service="kubelet",image!=""}[5m])) by (container) > 0

- Resource Requests and Limits: Properly configure

cpu.requestandcpu.limitto ensure optimal resource allocation. Leverage Kubernetes Horizontal Pod Autoscaler (HPA) or Vertical Pod Autoscaler (VPA) to dynamically adjust resource requirements:

resources:

requests:

cpu: "100m"

limits:

cpu: "500m"- Resource Quotas: Define resource quotas at the namespace level to prevent over-consumption and ensure fair resource distribution across teams and applications:

apiVersion: v1

kind: ResourceQuota

metadata:

name: cpu-quota

namespace: my-namespace

spec:

hard:

requests.cpu: "4"

limits.cpu: "8"- Alerting: Use Prometheus alerts:

groups:

- name: CPU Alerts

rules:

- alert: HighCPUUsage

expr: rate(container_cpu_usage_seconds_total[5m]) > 0.8

for: 2m

labels:

severity: warning

annotations:

summary: "High CPU usage detected"- Profile Applications: Conduct application profiling to analyze CPU usage, which helps fine-tune configurations for enhanced performance:

- For Go applications: pprof

- For Java applications: VisualVM, JProfiler

- Run Stress Tests Regularly: Use tools like

stress-ngto simulate high loads and identify potential bottlenecks or inefficiencies in applications and infrastructure:stress-ng --cpu 4 --vm 2 --vm-bytes 512M --timeout 10s

- Analyze Logs and Metrics: Regularly review logs, metrics, and performance data to detect anomalies and effectively plan capacity:

- kubectl logs <pod-name>

Key Takeaway

These strategies help you efficiently manage resources in Kubernetes or OpenShift environments, ensuring the performance, stability, and scalability of your applications. Regular monitoring and proactive optimization are crucial for maintaining a reliable, containerized ecosystem.

Useful links:

grafana.com: optimize resource usage

These Solutions are Engineered by Humans

Did you find this article interesting? Are you an “under the hood” kind of person? We’re really big on automation and we’re always looking for people in a similar vein to fill roles like this one as well as other roles here at Würth Phoenix.