Offloading Data Enrichment to Satellite Machines with Logstash

In high-demand environments, efficiency isn’t just an advantage: it’s essential. One of the biggest hurdles we encountered was the heavy load placed on the master nodes of NetEye’s Elastic stack during data enrichment. As data volumes grow, so does the complexity of enrichment, and with it the need for a smarter approach. Our solution was to offload data enrichment to Logstash running on the satellite nodes. This change has improved performance, streamlined operations, and opened the door to greater scalability.

The Problem: A Critical Bottleneck

Traditionally, data is collected by Elastic Agents and sent to the master nodes, with the Satellite acting only as a proxy for the data flow. The master nodes then enrich the data with the necessary context before it is indexed into Elasticsearch. This means the master nodes must receive raw data, apply enrichment, and pass the result on to the Elasticsearch ingest nodes, which adds complexity and load to the core infrastructure.

On top of that, sending raw, unfiltered data means more network traffic, increased storage needs, and higher resource consumption across the board. It also means that irrelevant and unwanted events reach the ingest nodes, further increasing processing requirements and placing undue stress on Elasticsearch nodes.

The Solution: Revolutionizing Enrichment with Satellite Nodes

To combat this, we shifted the enrichment process to satellite machines distributed throughout the infrastructure. Here’s what makes this approach so effective:

Precision Control and Decentralized Complexity

Pushing enrichment to the satellite nodes distributes the complexity across the infrastructure. Instead of the master nodes being overloaded with enrichment tasks, the satellites handle this work, leaving the master nodes free to focus on critical, high-level management tasks. The satellites are also the natural place to drop unwanted events, which reduces the number of logs Elasticsearch needs to process.
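As a rough illustration of dropping events on a satellite, the Python sketch below filters a stream of events before they are forwarded. In the actual setup this logic lives in the Logstash pipeline (for example, a conditional wrapped around Logstash's `drop` filter); the field names `log_level` and `source`, and the drop rules themselves, are hypothetical assumptions rather than NetEye's real configuration.

```python
from typing import Iterable, Iterator

# Hypothetical drop rules: which field values count as "unwanted".
# Illustrative assumptions only, not NetEye's actual rules.
DROP_LEVELS = {"debug", "trace"}
DROP_SOURCES = {"healthcheck"}


def is_unwanted(event: dict) -> bool:
    """Return True if the event should be discarded on the satellite."""
    level = str(event.get("log_level", "")).lower()
    source = event.get("source")
    return level in DROP_LEVELS or source in DROP_SOURCES


def drop_unwanted(events: Iterable[dict]) -> Iterator[dict]:
    """Yield only the events that survive the drop rules."""
    for event in events:
        if not is_unwanted(event):
            yield event


if __name__ == "__main__":
    raw = [
        {"message": "disk full", "log_level": "ERROR", "source": "syslog"},
        {"message": "tick", "log_level": "DEBUG", "source": "app"},
        {"message": "ok", "log_level": "INFO", "source": "healthcheck"},
    ]
    kept = list(drop_unwanted(raw))
    print(len(kept), "of", len(raw), "events forwarded")
```

Because the filter is a generator, events are checked one at a time as they stream through, which mirrors how a pipeline processes events rather than loading a whole batch into memory.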

Empowered Efficiency and Reduced Load on Elasticsearch

Data arrives at the ingest nodes already enriched and filtered, significantly reducing the processing burden. This translates into faster ingestion times, lower CPU and memory consumption, and better overall performance.

Lightning-Fast Ingestion and Optimized Bandwidth Usage

Since data arrives already enriched and filtered, the ingest nodes can process it much faster, reducing the load on Elasticsearch and making better use of its resources. And because unwanted events are dropped on the satellites, less data travels over the network in the first place, cutting bandwidth usage. This matters most in remote or bandwidth-constrained environments.

Architectural Overview

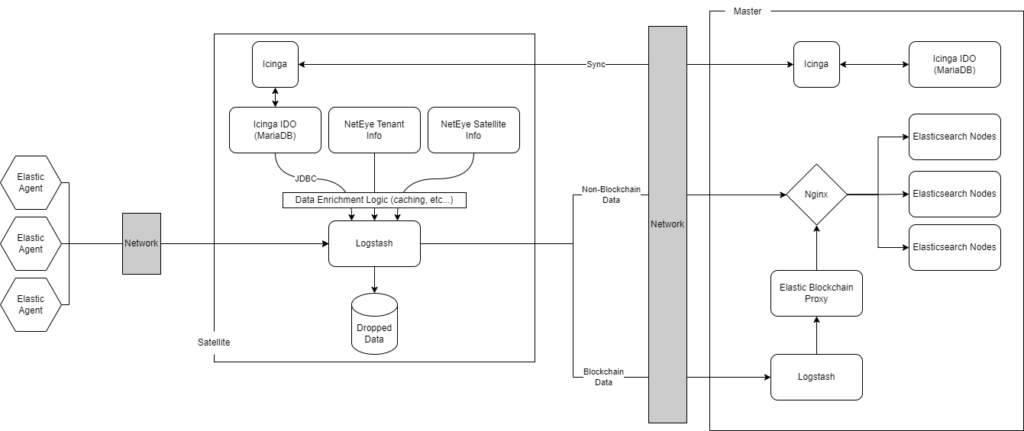

Below is a simple architectural diagram that illustrates this setup:

In this architecture:

- Satellites collect, enrich, and filter data locally using Logstash.

- Only enriched and filtered data is sent to the master nodes.

- The master nodes then forward this pre-enriched data to Elasticsearch ingest nodes, significantly reducing the ingest load.

In more detail, we restructured the enrichment process as follows. On each Satellite, a Logstash JDBC filter queries the Satellite’s local Icinga IDO database, which is kept fully in sync with Icinga on the Master. We also add further context (such as Local Domain, Zone, and Tenant) using information already available in the Satellite’s configuration. The Satellite then parses and filters the data, including dropping unwanted events, and forwards it to the Master nodes.
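The Python sketch below approximates this enrichment step under stated assumptions: an in-memory SQLite table named `hosts` stands in for the Satellite's Icinga IDO (the real IDO schema is different), and the `domain`, `zone`, and `tenant` values are hypothetical placeholders for information taken from the Satellite configuration. In Logstash itself, the lookup would be handled by a JDBC filter plugin such as `jdbc_streaming`, and static fields could be added with the `mutate` filter's `add_field` option.

```python
import sqlite3

# Hypothetical static context the satellite already knows from its own
# configuration (placeholder values, not real NetEye settings).
SATELLITE_CONTEXT = {"domain": "site-a.example", "zone": "zone-a", "tenant": "tenant-1"}


def make_demo_ido() -> sqlite3.Connection:
    """Create an in-memory stand-in for the satellite's local Icinga IDO.

    The real IDO schema is different; this single 'hosts' table is a
    simplified assumption for illustration only.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE hosts (name TEXT PRIMARY KEY, address TEXT, hostgroup TEXT)")
    conn.executemany(
        "INSERT INTO hosts VALUES (?, ?, ?)",
        [("web01", "10.0.0.11", "webservers"), ("db01", "10.0.0.21", "databases")],
    )
    return conn


def enrich(event: dict, ido: sqlite3.Connection) -> dict:
    """Return a copy of the event with IDO lookup results and satellite context."""
    enriched = dict(event)
    # Parameterized lookup, similar in spirit to a JDBC filter's prepared statement.
    row = ido.execute(
        "SELECT address, hostgroup FROM hosts WHERE name = ?",
        (event.get("host"),),
    ).fetchone()
    if row is not None:
        enriched["host_address"], enriched["host_group"] = row
    else:
        # Tag instead of dropping, so events from unknown hosts stay visible.
        # A new list is built so the caller's original "tags" list is not mutated.
        enriched["tags"] = list(event.get("tags", [])) + ["ido_lookup_failed"]
    # Satellite-level context; note this overwrites any same-named event fields.
    enriched.update(SATELLITE_CONTEXT)
    return enriched


if __name__ == "__main__":
    ido = make_demo_ido()
    print(enrich({"host": "web01", "message": "login failed"}, ido))
    print(enrich({"host": "unknown01", "message": "timeout"}, ido))
```

Tagging failed lookups instead of discarding the event is a design choice of this sketch: events from hosts unknown to the IDO still reach the master nodes, where they can be spotted and investigated.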

The Results

The impact of this architectural change has been tremendous:

- Performance Boost: Master and ingest nodes now operate more efficiently, handling only enriched data, leading to improved performance across the platform

- Resource Savings: Offloading enrichment freed up CPU and memory on master nodes, making room for other critical processes

- Reduced Event Volume: By dropping irrelevant events on satellite nodes, we cut down on the number of events that hit Elasticsearch, leading to reduced storage needs and faster searches

- Better Bandwidth Efficiency: With less data traversing the network, bandwidth usage dropped, allowing for more scalable and resilient operations, especially in distributed environments

Conclusion

Offloading data enrichment to satellite machines with Logstash not only streamlined our architecture but also brought significant improvements in performance, resource utilization, and scalability. This approach has allowed us to handle higher volumes of enriched data more effectively, making our NetEye platform more robust and future-proof.

These Solutions are Engineered by Humans

Did you find this article interesting? Does it match your skill set? Our customers often present us with problems that need customized solutions. In fact, we’re currently hiring for roles just like this and others here at Würth Phoenix.