In the enormous world of Log Collection, quite often customers need to collect logs from various systems in remote locations, like from an office in another country.

For Icinga we know that the latest NetEye 4.20 release fully supports distributed monitoring, but what about the Log Manager and SIEM modules?

Is it possible to use the NetEye Satellite feature to collect logs within a distributed NetEye Architecture? In other words, can logs pass directly from satellite to satellite without having to go through the master?

Now I’ll tell you how we dealt with this situation for many customers over the last year.

Infrastructure

Let’s assume we need only two components to reach our goal:

- Logstash: collect logs from an external Beats Agent

- Filebeat: take advantage of the modules provided by Elastic and collect logs from various appliances (see Beats Modules)

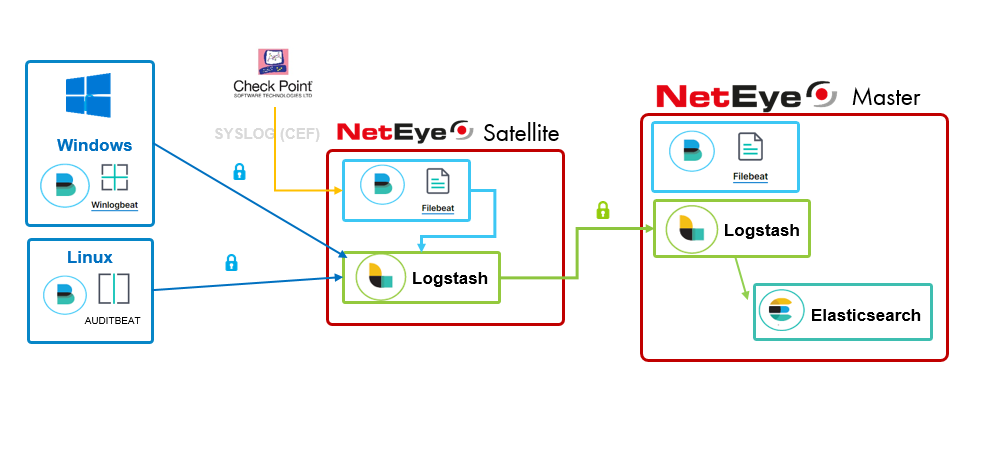

The image above gives an example of the flow that logs follow from a DMZ, where the NetEye Satellite is deployed, to the NetEye Master in Production. Only a single communication path goes from the DMZ to Production, and it is protected by TLS.

Logstash on NetEye Satellite

All the Beats Agents communicate with a Logstash instance installed on the NetEye Satellite, which forwards all the logs via TLS to the Logstash instance installed on the NetEye Master (this is possible by taking advantage of the Logstash HTTP output plugin, as explained in the Elastic Logstash Documentation). The rest of the flow is the same: logs are parsed and filtered, and finally sent to the Elasticsearch database.

The first step is to create a new input on the satellite’s Logstash instance to receive events from client and server hosts. For simplicity we keep the same input port as on the master (TCP 5044), and we need to generate a server certificate for the new input based on the Satellite’s DNS name, which we push to all our clients at a later time.

An example configuration for the input is the following:

    input {
      beats {
        port => 5044
        client_inactivity_timeout => 300
        ssl => true
        ssl_certificate_authorities => "/usr/share/pki/ca-trust-source/anchors/root-ca.crt"
        ssl_certificate => "/etc/logstash/certs/neteye-FQDN_SAT.crt.pem"
        ssl_key => "/etc/logstash/certs/private/neteye-FQDN_SAT.key"
        ssl_verify_mode => "force_peer"
        ssl_peer_metadata => true
      }
    }

We don’t need to add any particular filters on the satellite but, in my experience, I’ve found it useful to add a field on my logs to identify the satellite from which each log comes.

Here’s my filter block:

    filter {
      ## Add agent name for satellite
      ruby {
        init => "
          require 'socket'
          @@hostname = Socket.gethostbyname(Socket.gethostname).first
        "
        code => "event.set('[@metadata][satellite]', @@hostname)"
      }
      mutate {
        add_tag => ['%{[@metadata][satellite]}']
        add_field => { "[satellite][hostname]" => "%{[@metadata][satellite]}" }
      }
      ## Copy @metadata into a regular field so it survives the hop to the master
      if [@metadata] {
        mutate {
          rename => [ "[@metadata]", "[metadata]" ]
        }
      }
    }

Pay attention! The @metadata fields that Logstash uses internally are not part of its output, so they are not forwarded to the other Logstash instance. You need a small workaround, such as the rename at the end of the filter above, to avoid losing data like the Beats pipeline (stored in the field @metadata.pipeline). See the documentation for details.
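On the master, the renamed fields have to be moved back before any processing that relies on them. The following is only a minimal sketch of that step, assuming the satellite has renamed `[@metadata]` to `[metadata]` as shown in its filter, and assuming that the usual Beats keys (`pipeline`, `beat`, `version`, `type`) are the ones you need. Where this block is placed in the master’s pipeline is also an assumption; adapt it to your setup.

```
filter {
  # Events forwarded by a satellite carry the former @metadata content
  # in the regular field [metadata].
  if [metadata] {
    mutate {
      # Move the Beats keys back under @metadata so that references such
      # as %{[@metadata][pipeline]} resolve again. Keys that are missing
      # on an event are simply skipped by rename.
      rename => {
        "[metadata][pipeline]" => "[@metadata][pipeline]"
        "[metadata][beat]"     => "[@metadata][beat]"
        "[metadata][version]"  => "[@metadata][version]"
        "[metadata][type]"     => "[@metadata][type]"
      }
      # Drop what is left of the helper field; remove this line if you
      # prefer to keep it for troubleshooting.
      remove_field => [ "metadata" ]
    }
  }
}
```

Renaming individual keys, rather than the whole `[metadata]` object, avoids overwriting anything the master’s own input may already have stored under `@metadata`.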

Finally, the logs are sent to the Logstash instance on the Master using the HTTP output configuration. To do this we need to create certificates and exchange them between the Master and Satellite Logstash instances (a client certificate generated with the NetEye Master CA):

    output {
      http {
        format => "json_batch"
        http_method => "post"
        client_key => ["/etc/logstash/certs/private/neteye-logstash.key.pem"]
        client_cert => ["/etc/logstash/certs/neteye-logstash.crt.pem"]
        cacert => ["/usr/share/pki/ca-trust-source/anchors/master-root-ca.crt"]
        url => "https://neteye-master-host:5000"
      }
    }

Keep this in mind! You need to open the firewall port (TCP 5000) on the NetEye Master so that it’s reachable from the Satellite, and you need to add a new HTTP input configuration on the Master’s Logstash instance like this:

    input {
      http {
        port => 5000
        ssl => true
        ssl_certificate_authorities => "/neteye/shared/logstash/conf/certs/root-ca.crt"
        ssl_certificate => "/neteye/shared/logstash/conf/certs/logstash-server.crt.pem"
        ssl_key => "/neteye/shared/logstash/conf/certs/private/logstash-server.key"
        ssl_verify_mode => "force_peer"
        remote_host_target_field => "[satellite][host]"
      }
    }

Note: In this case, we already have a valid server certificate, the same one used by the Beats input configuration that’s always present on the NetEye Master.
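Once events arrive through this input, the master can tell them apart from events shipped to it directly: they carry `[satellite][hostname]` (added by the satellite’s filter) and `[satellite][host]` (filled in by `remote_host_target_field`). As a rough sketch of how this could be used in a conditional on the master, with tag names that are purely hypothetical:

```
filter {
  # [satellite][hostname] is set only by the satellite's filter block,
  # so its presence means the event passed through a satellite.
  if [satellite][hostname] {
    mutate {
      # "via_satellite" is an illustrative tag name, not a NetEye convention
      add_tag => [ "via_satellite" ]
    }
  } else {
    mutate {
      # "direct" is likewise only an example
      add_tag => [ "direct" ]
    }
  }
}
```

The same condition could guard satellite-specific parsing or routing instead of just adding a tag.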

Filebeat on NetEye Satellite

To take advantage of Filebeat Modules, which we can use on the NetEye Master, we can also add the Filebeat agent to the NetEye Satellite (directly from either NetEye Repos or the Elastic Repo).

The Filebeat agent on the satellite will now be configured in the same way that we configure the Beats instance on the client and server hosts: pointing to the satellite’s FQDN (instead of the master’s).

An example configuration for the output is:

    #----------------------------- Logstash output --------------------------------
    output.logstash:
      # The Logstash hosts
      hosts: ["sat-fqdn:5044"]

      # Optional SSL. By default is off.
      # List of root certificates for HTTPS server verifications
      ssl.certificate_authorities: ["/usr/share/pki/ca-trust-source/anchors/root-ca.crt"]

      # Certificate for SSL client authentication
      ssl.certificate: "/etc/logstash/certs/ls-filebeat.crt.pem"

      # Client Certificate Key
      ssl.key: "/etc/logstash/certs/ls-filebeat.key.pem"

Les jeux sont faits!

Okay, now we can send all logs from any remote agent to our NetEye Master passing through the NetEye Satellite and avoiding security issues related to exposing the NetEye Master to all the remote Zones in our architecture.

We have the information about the satellite thanks to its own custom filter, and we preserve all the fields from Beats Agents.

All these configurations are currently carried out as part of consulting but, who knows, perhaps in the not-too-distant future our developers will manage to integrate this into NetEye itself.