Building and Stress Testing Multitenancy NetEye-NTOP

Introduction

The nTop application is one of NetEye’s integrated solutions for network traffic monitoring. To test its scalability, we built a test bench that simulates a customer who needs to monitor a large number of devices (e.g., 1-2K routers) and wants to group this information at the nTop level (e.g., visualized as a single router belonging to a provider, area, etc.). To reach this goal, we used:

- Docker and nflow-generator to provide scalability

- Multiple nTopng/nProbe service instances to provide segmentation

Read on to see our results.

Design

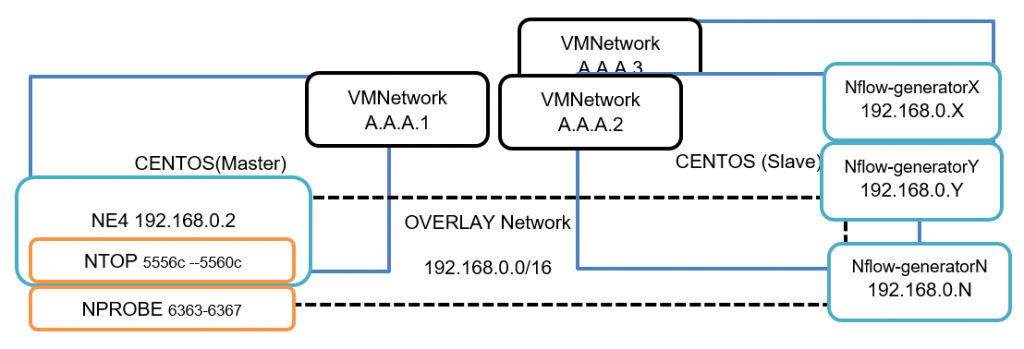

Our scenario consists of several devices (docker containers) that send data to a central ntopng-Neteye docker. Traffic between containers is isolated within a dedicated overlay network. nTop/nProbe then segments this data into subsets using multiple instances of the nTopng/nProbe services. An overview of the architecture is shown here:

Solution

The architecture designed for this PoC is based on 3 VMs inside a vCenter. On each of the 3 VMs we install Docker and build a master-slave layout. On the master we run a dockerized NetEye, while on the 2 slaves we run Docker containers that simulate routers sending NetFlow traffic (using the open source tool nflow-generator):

- (Master) CentOS

  - Docker (NetEye)

    - nTop

    - nProbe

  - Overlay Network

- (Slave1) CentOS

  - Docker (nflow-generator)

  - Overlay Network

- (Slave2) CentOS

  - Docker (nflow-generator)

  - Overlay Network

On the master node we initialize the swarm, create the overlay network neteye_net, and run the NetEye container attached to this network (including port forwarding for remote access):

[Master] docker swarm init

[Master] docker network create -d overlay --subnet=192.168.0.0/16 --attachable neteye_net

[Master] docker run --rm -d --network neteye_net --ip 192.168.0.2 --name neteye -p 8080:443 -p 2222:22 --privileged docker-si.wuerth-phoenix.com/neteye:4.18-build

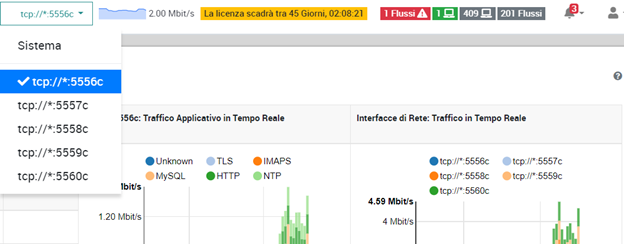

To have the NetFlow traffic properly segmented, we add collector interfaces to the nTopng service. In the configuration file located at /neteye/shared/ntopng/conf/ntopng.conf we add the listening collector interfaces:

- -i=tcp://*:5556c

- -i=tcp://*:5557c

- -i=tcp://*:5558c

- -i=tcp://*:5559c

- -i=tcp://*:5560c
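When the number of segments grows, typing these lines by hand gets error-prone. The following is a minimal sketch that prints one collector-interface line per port in a range; the helper name `collector_lines` is hypothetical, and the line format is copied from the list above.

```shell
# Print one ntopng collector-interface line per port in [first, last].
# The "-i=tcp://*:<port>c" form is taken from the list above.
collector_lines() (
    port=$1
    while [ "$port" -le "$2" ]; do
        printf '%s\n' "-i=tcp://*:${port}c"
        port=$((port + 1))
    done
)

collector_lines 5556 5560
```

The output can be reviewed and then pasted into the nTopng configuration file.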

Then we split the nProbe service into multiple service instances, each forwarding traffic to one of the nTopng collector ports:

- 6363 forwards to 5556

- 6364 forwards to 5557

- 6365 forwards to 5558

- 6366 forwards to 5559

- 6367 forwards to 5560
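The mapping above follows a fixed offset (6363 - 5556 = 807), so it can be computed rather than maintained by hand. This is only a sketch of that arithmetic; the names `PORT_OFFSET` and `collector_port_for` are hypothetical.

```shell
# Offset between an nProbe listening port and its ntopng collector port,
# derived from the mapping above (6363 - 5556 = 807).
PORT_OFFSET=807

# Print the collector port that a given nProbe port forwards to.
collector_port_for() {
    echo $(( $1 - PORT_OFFSET ))
}

for probe_port in 6363 6364 6365 6366 6367; do
    echo "nProbe ${probe_port} -> collector $(collector_port_for "$probe_port")"
done
```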

Configurations are created inside the folder /neteye/shared/nprobe/conf/ (e.g., nprobe-6363.conf), and the services can be managed with systemctl via the unit ntoprobe@<PORT>. Once nTopng and nProbe are properly configured, we set up the nflow-generator containers on the slave nodes.
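With one service instance per port, the same systemctl action usually has to be repeated for each of them. The sketch below only prints the commands (a dry run) so they can be checked first; the function name `nprobe_service_cmds` is hypothetical, and the unit name is the one given in the text.

```shell
# Print (do not run) one systemctl command per nProbe instance.
# Usage: nprobe_service_cmds <action> <port>...
nprobe_service_cmds() (
    action=$1
    shift
    for port in "$@"; do
        echo "systemctl ${action} ntoprobe@${port}"
    done
)

nprobe_service_cmds start 6363 6364 6365 6366 6367
```

Once the printed commands look right, they can be run manually on the master.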

The next step is to attach the slave VMs to the swarm:

[Slave] docker swarm join --token <TOKEN> <MASTER-IP>:2377
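The token is printed by `docker swarm init` and can be retrieved again later on the master with `docker swarm join-token -q worker`. As a small sketch, the join command for the slaves can be assembled from that token; the helper name `swarm_join_cmd` is hypothetical, and the Docker call is left as a comment because it needs a running swarm.

```shell
# Build the join command for a worker node.
# Usage: swarm_join_cmd <token> <master-ip> [port]   (port defaults to 2377)
swarm_join_cmd() {
    echo "docker swarm join --token $1 $2:${3:-2377}"
}

# On the master (requires Docker, so not executed here):
#   token=$(docker swarm join-token -q worker)
#   swarm_join_cmd "$token" "<MASTER-IP>"
```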

Then we connect the containers with the nflow-generator to the network:

[Slave] docker run -dit --network neteye_net --ip 192.168.0.101 --name slave1 networkstatic/nflow-generator -t 192.168.0.2 -p 6363
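If every container should get a fixed address, as in the command above, the addresses have to spill over into the third octet once there are more than about 150 containers, which is one reason the /16 subnet is convenient. The sketch below shows one possible numbering scheme starting at 192.168.0.101; the helper `ip_for_index` and the scheme itself are assumptions, not part of the original setup, and addresses that Docker reserves on the overlay network are not accounted for.

```shell
# Map a 1-based container index to an address in 192.168.0.0/16,
# starting at 192.168.0.101 as in the command above.
ip_for_index() (
    n=$((100 + $1))
    echo "192.168.$((n / 256)).$((n % 256))"
)

ip_for_index 1     # 192.168.0.101
ip_for_index 200   # 192.168.1.44
```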

To speed up the creation of N Docker containers, we wrote simple Ansible and Bash scripts for automation. Here’s the Bash script:

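#!/usr/bin/bash
# Start N nflow-generator containers on the neteye_net overlay network.
# Options: -d <count>  -n <name prefix>  -t <destination>  -p <port>
#          -r yes   (remove existing containers with the same prefix first)

docker_name="alpine"

while getopts d:n:t:p:r: flag
do
    case "${flag}" in
        d) docker=${OPTARG};;
        n) docker_name=${OPTARG};;
        t) destination=${OPTARG};;
        p) port=${OPTARG};;
        r) remove=${OPTARG};;
    esac
done

# Fall back to a single container unless -d is a positive integer.
# The regex check comes first because -le fails on non-numeric input.
if ! [[ $docker =~ ^[0-9]+$ ]] || [ "$docker" -le 0 ]
then
    docker=1
fi

# nflow-generator needs both a collector address and a port.
if [ -z "$destination" ] || [ -z "$port" ]
then
    echo "Usage: $0 -t <destination> -p <port> [-d <count>] [-n <name>] [-r yes]" >&2
    exit 1
fi

echo "Docker(s): $docker"
echo "Destination: $destination"
echo "Port: $port"

if [ "$remove" = "yes" ]
then
    # The name filter matches substrings, so this selects every container
    # whose name contains the chosen prefix.
    old_docker=$(docker container ls -a --filter "name=${docker_name}" --format '{{.Names}}' | xargs)
    if [ -n "${old_docker}" ]
    then
        # Intentionally unquoted: one argument per container name.
        docker container stop $old_docker
        docker container rm $old_docker
    fi
fi

# Create N containers in parallel.
for (( c=1; c<=docker; c++ ))
do
    d_name="${docker_name}${c}"
    echo "Creating $d_name"
    docker run -dit --network neteye_net --name "$d_name" networkstatic/nflow-generator -t "$destination" -p "$port" &
done

wait
echo "All dockers created"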
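The script falls back to a single container when the -d value is not a usable number. As an illustration, that check can be isolated into a small helper; this is a portable variant written with `case` instead of a Bash regex, and the name `is_positive_int` is hypothetical.

```shell
# Succeed only if the argument is a positive decimal integer.
is_positive_int() {
    case "$1" in
        ''|*[!0-9]*) return 1 ;;   # empty, or contains a non-digit
    esac
    [ "$1" -gt 0 ]
}

count="abc"
is_positive_int "$count" || count=1
echo "Docker(s): $count"
```

Keeping the check in one place makes it harder to get the order of the tests wrong, since a numeric comparison on non-numeric input is an error rather than a simple false.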

Conclusion

At the end of this PoC, we proved that nTop can manage traffic from about 2000 Docker containers (routers) without problems. The simulated routers were distributed across the nProbe instances as follows:

- 300 to nProbe 6363

- 200 to nProbe 6364

- 200 to nProbe 6365

- 200 to nProbe 6366

- 1010 to nProbe 6367
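As a quick sanity check on the "about 2000" figure, the distribution above can be summed in a few lines. The variable `plan` and the helper `total_routers` are hypothetical names; the port and count values are the ones listed above.

```shell
# port:count pairs taken from the list above
plan="6363:300 6364:200 6365:200 6366:200 6367:1010"

# Sum the counts of a space-separated list of port:count pairs.
total_routers() (
    total=0
    for pair in $1; do
        total=$(( total + ${pair#*:} ))
    done
    echo "$total"
)

for pair in $plan; do
    echo "nProbe ${pair%%:*}: ${pair#*:} containers"
done
echo "Total: $(total_routers "$plan")"   # Total: 1910
```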

The architecture deployed for this PoC using nTop/nProbe provides the desired level of segmentation. The PoC thus demonstrates that nTop can manage data from a large number of devices (about 2K in our test), and that traffic can be segmented using collector interfaces, as you can see from this image: