With the rapidly advancing digitalization of the economy, IT availability is becoming business-critical. Unplanned downtime is no longer acceptable; 24/7 operation is indispensable. For administrators, this means identifying incidents before they disrupt business processes. AIOps promises to help with exactly this.

The digital transformation of our society is changing the way companies do business. New products and services require continuously functioning IT; business analytics and data are the indispensable backbone of today’s economy. Where the credo of 19th-century industrialization was “The wheels must always be turning”, the analogous rule today is that IT must be both available and performing well. Unplanned downtime is not acceptable. The operational availability of services from a company’s own data center, from the cloud and from other sources must be guaranteed. Put simply: an alarm that only reaches administrators when users are already storming the helpdesk is worthless. Emerging problems must be detected and eliminated before they can affect user productivity. Artificial intelligence (AI) in monitoring can help administrators do this. The keyword here is “Artificial Intelligence for IT Operations”, or AIOps for short. The term was coined and defined by the market research company Gartner: “AIOps platforms use large amounts of data, modern machine learning and other advanced analytical technologies to directly and indirectly improve IT operations (monitoring, automation and service desks) with proactive, personal and dynamic insights.”

Gartner divides AIOps into five consecutive levels of varying complexity: getting rid of false alarms, improving current status, reducing potential impact, minimizing downtime, and improving service management in general. AI can play a role in all of them. On the one hand, AIOps relies on machine learning (ML) to detect patterns in monitoring data that indicate unusual incidents or conditions. On the other hand, it includes AI systems that make decisions based on the information obtained. There are two main approaches to analyzing monitoring data with machine learning: univariate and multivariate. Univariate analysis evaluates only one data series, for example the load of a processor. It requires significantly less computing power than multivariate analysis, yet it can still deliver meaningful results, such as peak loads and when they occur. However, the recommendations it yields are often too vague to base decisions on, and a lot of experience is needed to interpret them. The problem resembles that of classical monitoring: if an average over fixed time intervals is treated as the normal value, information is lost. Short peaks are smoothed out, and the problems behind them may go undetected.
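The averaging problem can be reproduced in a few lines. The following is a minimal sketch, assuming synthetic per-minute CPU values: it rolls the series up into 15-minute averages, as classical monitoring might, and compares the result with a simple univariate detector based on a rolling z-score. The function names, the window of 10 samples and the threshold of 3 standard deviations are hypothetical choices for illustration, not the method of any particular product.

```python
from statistics import mean, pstdev


def interval_averages(samples, interval):
    """Average the series over fixed, non-overlapping intervals (a classic rollup)."""
    return [mean(samples[i:i + interval]) for i in range(0, len(samples), interval)]


def zscore_peaks(samples, window=10, threshold=3.0):
    """Return the indices of samples that lie more than `threshold` standard
    deviations above the mean of the preceding `window` samples."""
    peaks = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu, sigma = mean(history), pstdev(history)
        if sigma > 0 and (samples[i] - mu) / sigma > threshold:
            peaks.append(i)
    return peaks


# Synthetic CPU load in percent, one value per minute for an hour,
# with a single one-minute spike to 95 % at minute 33.
cpu = [20, 22, 21, 19, 20, 21, 22, 20, 21, 20] * 6
cpu[33] = 95

print([round(a, 1) for a in interval_averages(cpu, 15)])  # [20.5, 20.7, 25.6, 20.7]
print(zscore_peaks(cpu))                                  # [33]
```

The one-minute spike to 95 % appears only as about 25.6 % in its 15-minute average, which would stay below a typical alarm threshold, whereas the z-score check, which compares each value with its recent history, flags minute 33.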

Multivariate analyses avoid this limitation by combining several metrics, for example the peak loads of different servers together with log events. This makes it possible, for instance, to break load down into individual workloads: does a batch job generate cyclical load peaks, or is an attack responsible for them? By linking different metrics, the behavior captured by monitoring can be interpreted better and used as a basis for decision-making. However, multivariate analyses are not automatically better than univariate ones. Analyzing multiple metrics requires considerably more resources and time. In practice, multivariate analyses complement univariate ones when specific questions arise that need more detailed clarification.
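A multivariate check can be as simple as joining two data sources on time. The sketch below assumes that CPU peaks have already been detected and that batch-job start times are available from log events; it labels each peak as explained by a batch job or unexplained. The `Peak` type, the `attribute_peaks` function, the five-minute tolerance and the sample data are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Peak:
    minute: int   # minute of the day at which a CPU load peak was detected
    load: float   # peak CPU load in percent


def attribute_peaks(peaks, batch_starts, tolerance=5):
    """Split peaks into those that begin within `tolerance` minutes after a
    batch-job start (taken from log events) and those with no known cause."""
    explained, unexplained = [], []
    for peak in peaks:
        if any(0 <= peak.minute - start <= tolerance for start in batch_starts):
            explained.append(peak)
        else:
            unexplained.append(peak)
    return explained, unexplained


# Hypothetical data: a batch job starts every two hours; four peaks were detected.
batch_starts = [58, 178, 298]
peaks = [Peak(60, 91.0), Peak(180, 89.5), Peak(300, 92.0), Peak(317, 97.0)]

explained, unexplained = attribute_peaks(peaks, batch_starts)
print([p.minute for p in unexplained])  # [317]
```

The three peaks that follow the two-hourly batch starts are cyclical and expected; the peak at minute 317 has no matching log event and is the one worth investigating further.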

More Meaning through More Metrics

In practice, a situation may look like this:

Here the utilization of a processor increases linearly, as shown by the blue curve. The curve below it shows the increase in CPU load compared to the previous week.

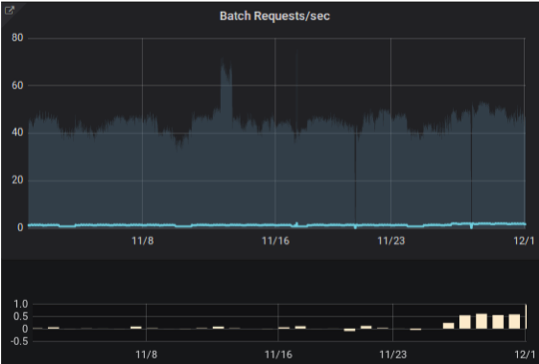

The number of batch requests, however, does not increase comparably, so batch processing can be ruled out as the underlying cause.

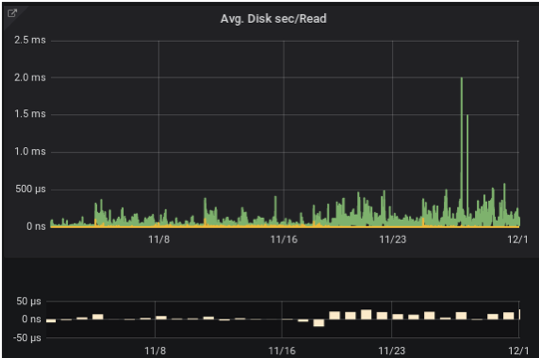

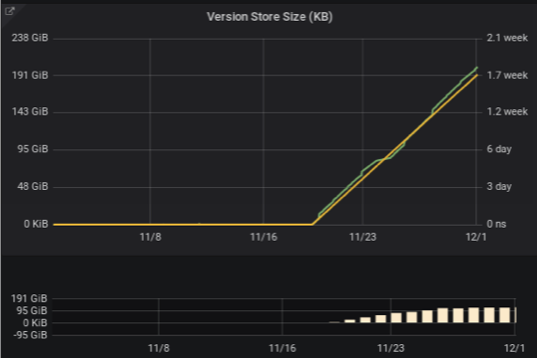

A joint analysis of hard disk latency, storage size, the version and the longest-running transaction gives a more accurate picture and explains the processor load: a closer look at the database and its configuration would be worthwhile here.

AI can pick out trends like this from monitoring data and present them to administrators, which makes the manual search for the source of an error much easier and faster. In addition, AI algorithms can search for patterns in administrators’ actions and thus actively propose a proven solution for similar situations, or, in individual cases, even take action themselves.
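Proposing a proven solution can be approximated by looking up the most similar past incident. The sketch below assumes a hypothetical incident history in which each entry records a set of symptoms and the action an administrator took; similarity is measured with the Jaccard index, one simple choice among many. Symptom names, actions and the 0.5 threshold are illustrative assumptions.

```python
def jaccard(a, b):
    """Share of symptoms two incidents have in common (0 = none, 1 = identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def suggest_action(symptoms, history, min_similarity=0.5):
    """Return the action recorded for the most similar past incident,
    or None if no past incident is similar enough."""
    best = max(history, key=lambda inc: jaccard(symptoms, inc["symptoms"]), default=None)
    if best is None or jaccard(symptoms, best["symptoms"]) < min_similarity:
        return None
    return best["action"]


# Hypothetical log of past incidents and how administrators resolved them.
history = [
    {"symptoms": {"cpu_high", "disk_latency_high", "long_transaction"},
     "action": "review database configuration"},
    {"symptoms": {"cpu_high", "batch_backlog"}, "action": "reschedule batch jobs"},
    {"symptoms": {"disk_full"}, "action": "rotate logs"},
]

print(suggest_action({"cpu_high", "disk_latency_high"}, history))  # review database configuration
print(suggest_action({"memory_leak"}, history))                    # None
```

Returning `None` for unfamiliar situations keeps the administrator in charge whenever the history offers no close match.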

SIEM Also Benefits

These possibilities are not limited to IT operations. In IT security, too, this approach can help analyze monitoring data using AI. In Security Information & Event Management (SIEM), very large volumes of data from different sources must likewise be aggregated and analyzed, and all available monitoring data should be taken into account in order to detect anomalies relevant to security and data protection. However, the challenge here is not only to filter large amounts of data and make them available to administrators. It is also currently hard to correctly identify so-called complex events as security incidents. In a complex event, a combination of individual events that are not critical in and of themselves leads to potential problems, and these events need not be in any immediately recognizable relationship to each other. Without machine learning and artificial intelligence, fast and targeted intervention in a SIEM environment is only possible with an immense amount of experience and considerable time.
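The idea of a complex event can be illustrated with a rule that fires only when several individually harmless event types occur on the same host within a short time window. The event types, the ten-minute window and the `find_complex_events` function below are hypothetical; real SIEM correlation rules and ML-based detectors are considerably more sophisticated. The quadratic scan keeps the sketch readable but would not scale to large event volumes.

```python
from collections import defaultdict

# Hypothetical pattern: each event type alone is routine, together they are suspicious.
SUSPICIOUS_COMBINATION = {"failed_login", "privilege_change", "large_upload"}


def find_complex_events(events, pattern=SUSPICIOUS_COMBINATION, window=600):
    """events: iterable of (timestamp_seconds, host, event_type).
    Return (host, window_start) for the first time window per host in which
    all event types in `pattern` occur within `window` seconds."""
    by_host = defaultdict(list)
    for ts, host, event_type in sorted(events):
        by_host[host].append((ts, event_type))

    hits = []
    for host, host_events in by_host.items():
        for i, (start, _) in enumerate(host_events):
            # Collect the event types seen from `start` up to `start + window`.
            seen = {etype for ts, etype in host_events[i:] if ts - start <= window}
            if pattern <= seen:
                hits.append((host, start))
                break  # report each host once
    return hits


events = [
    (0, "web01", "failed_login"),
    (120, "web01", "privilege_change"),
    (400, "web01", "large_upload"),
    (50, "db01", "failed_login"),
    (3600, "db01", "privilege_change"),
    (3700, "db01", "large_upload"),
]
print(find_complex_events(events))  # [('web01', 0)]
```

Both hosts see the same three event types, but only on `web01` do they occur close enough together to form the suspicious combination.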

Conclusion

Machine learning and artificial intelligence will have a significant impact on all areas of life and work. IT is no exception. Modern monitoring solutions such as NetEye from Würth Phoenix make use of these technologies to make the work of IT administrators easier. Without comprehensive machine support, it is simply not possible to meet the current and future requirements that companies place on IT. As of today, these systems are able to support root cause analysis by filtering and evaluating the available information. We can assume that semi-autonomous monitoring solutions will not be long in coming. What is certain, however, is that AI and AIOps cannot replace conventional Application Performance Monitoring (APM). Rather, AIOps is a powerful tool that will help IT staff in their daily work, allowing them to identify and address problems more quickly and in a more targeted manner. Their experience will remain indispensable for a long time to come.

Artificial Intelligence and Machine Learning

The terms artificial intelligence (AI), machine learning (ML) and deep learning (DL) are often used synonymously. In reality, they describe related but distinct things. Machine learning refers to the mathematical methods for extracting information from existing data, for example the finding that a server shows unusual load peaks. Which data is processed with which algorithm is decided by the user during implementation. AI, in turn, is the application of this information to making decisions; in the case of load peaks, this could be an automatic alarm to administrators. Deep learning is a subfield of ML that uses multi-layered neural networks, which learn for themselves which information is passed on and how it is weighted. DL is loosely modeled on the human brain and requires a significant amount of computing capacity: the program AlphaGo, for instance, whose victories over top Go professionals in 2016 and 2017 caused a sensation, ran in its distributed version on a cluster of more than 1,000 CPUs.
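The division of labor described here can be made concrete with a deliberately small sketch. A percentile computed from historical load with Python’s `statistics.quantiles` stands in for the ML step of extracting information from existing data, and a simple rule stands in for the AI step of turning that information into a decision. Both functions and the synthetic history are hypothetical; a real system would use far richer models.

```python
from statistics import quantiles


def learn_baseline(history):
    """'ML' step (simplified stand-in): extract information from existing data,
    here the 99th percentile of historical CPU load."""
    return quantiles(history, n=100, method="inclusive")[98]


def decide(current_load, baseline):
    """'AI' step: turn the extracted information into a decision."""
    return "alert administrators" if current_load > baseline else "no action"


# Synthetic history of CPU load values (percent).
history = list(range(10, 60))
baseline = learn_baseline(history)   # 58.51
print(decide(95, baseline))          # alert administrators
print(decide(40, baseline))          # no action
```

Keeping the two steps in separate functions mirrors the distinction in the text: the learned baseline can be recomputed from new data without touching the decision rule, and vice versa.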