NetEye Automatic Provisioning in vSphere – Part 1

Deploying a Single Instance

As you probably already know, here at Würth Phoenix we love Ansible. We started our journey with Terraform for simple automation tasks, such as creating a virtual machine in vSphere from an existing template, and later switched to Ansible, which is now our everyday companion for automation.

We regularly use Ansible, combined with Packer, to build and test a new NetEye ISO every night. Building on that, we created a series of playbooks and roles to create and deploy NetEye instances and clusters on our vSphere installation.

vSphere is, next to Docker, one of the most important parts of our infrastructure – we run many, many different virtual machines on it. So, as you can imagine, we needed an easy, automatic way to deploy NetEye on it, both as a single instance and as a cluster configuration.

We started writing some roles and playbooks so Ansible could do the heavy lifting for us, while we would have more time to focus on feature development.

The first part we had to tackle was the actual creation of the virtual machines on vSphere (helpfully, Ansible provides a ton of modules to interact with its API), along with the specific configuration required to run NetEye properly.
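As a rough illustration of this first step, a VM-creation play could look like the sketch below. It assumes the `community.vmware` collection is installed. Every variable name (`vcenter_hostname`, `neteye_vm_name`, `neteye_iso_path` and so on), the sizing values and the guest ID are placeholders chosen for the example, not our actual configuration, and the parameters should be checked against the collection version you have installed.

```yaml
# Hypothetical sketch: all variables and values below are placeholders.
- name: Create a virtual machine for NetEye on vSphere
  hosts: localhost
  gather_facts: false
  tasks:
    - name: Create the VM and attach the NetEye ISO
      community.vmware.vmware_guest:
        hostname: "{{ vcenter_hostname }}"
        username: "{{ vcenter_username }}"
        password: "{{ vcenter_password }}"
        datacenter: "{{ vcenter_datacenter }}"
        cluster: "{{ vcenter_cluster }}"
        folder: "{{ vm_folder }}"
        name: "{{ neteye_vm_name }}"
        guest_id: centos7_64Guest   # assumed guest OS identifier
        state: poweredon
        hardware:
          num_cpus: 4               # example sizing only
          memory_mb: 8192
        disk:
          - size_gb: 40
            type: thin
            datastore: "{{ vm_datastore }}"
        networks:
          - name: "{{ vm_network }}"
        cdrom:
          - controller_number: 0
            unit_number: 0
            type: iso
            iso_path: "{{ neteye_iso_path }}"
      register: neteye_vm
```

Registering the result keeps the VM details available to later tasks, for example to look up the address the instance received.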

The next step was to install NetEye on the newly created virtual machine starting from the ISO, then perform the updates, the configuration, and the installation of all the various components.

The most annoying thing about this part was dealing with a root user with an (intentionally) expired password that must be reset immediately. In Bash, we would have used ‘expect’ to temporarily change the password and be able to access the instance via SSH, so we checked and... yes! Ansible has an expect module that saved the day.
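To give an idea of how the expect module can handle a forced password change, here is a minimal sketch. The prompt patterns, the SSH options and the variables (`neteye_ip`, `initial_root_password`, `new_root_password`) are assumptions made for the example; the exact prompts depend on the distribution, so the regular expressions will probably need adjusting. The module also requires `pexpect` on the host that runs the task.

```yaml
# Hypothetical sketch: prompts, variables and SSH options are assumptions.
- name: Reset the expired root password over SSH
  ansible.builtin.expect:
    command: >-
      ssh -o StrictHostKeyChecking=no
      -o UserKnownHostsFile=/dev/null
      root@{{ neteye_ip }}
    responses:
      # Keys are regular expressions matched against the prompts.
      "(?i)'s password:": "{{ initial_root_password }}"
      "(?i)current.*password:": "{{ initial_root_password }}"
      # Intended to match both the "new" and the "retype new" prompt.
      "(?i)new.*password:": "{{ new_root_password }}"
    timeout: 60
  delegate_to: localhost
  no_log: true   # keep the passwords out of the task output
```

Depending on how the server closes the session after the password change, the return code handling of this task may need tuning.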

Now that we could create a working instance on demand, we needed to manage it in order to integrate it into some workflows (and AWX).

We wanted to be able to create and restore snapshots of the instances, and to terminate the instances programmatically when needed.
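These lifecycle operations map fairly directly onto modules from the `community.vmware` collection. The tasks below are only a sketch under that assumption: the variable names and the snapshot name `clean-install` are invented for the example and do not reflect our actual roles.

```yaml
# Hypothetical sketch: variable names and the snapshot name are placeholders.
- name: Take a snapshot of the instance
  community.vmware.vmware_guest_snapshot:
    hostname: "{{ vcenter_hostname }}"
    username: "{{ vcenter_username }}"
    password: "{{ vcenter_password }}"
    datacenter: "{{ vcenter_datacenter }}"
    folder: "{{ vm_folder }}"
    name: "{{ neteye_vm_name }}"
    state: present
    snapshot_name: clean-install
    description: State right after the NetEye installation
  delegate_to: localhost

- name: Restore the instance to that snapshot
  community.vmware.vmware_guest_snapshot:
    hostname: "{{ vcenter_hostname }}"
    username: "{{ vcenter_username }}"
    password: "{{ vcenter_password }}"
    datacenter: "{{ vcenter_datacenter }}"
    folder: "{{ vm_folder }}"
    name: "{{ neteye_vm_name }}"
    state: revert
    snapshot_name: clean-install
  delegate_to: localhost

- name: Terminate the instance
  community.vmware.vmware_guest:
    hostname: "{{ vcenter_hostname }}"
    username: "{{ vcenter_username }}"
    password: "{{ vcenter_password }}"
    datacenter: "{{ vcenter_datacenter }}"
    folder: "{{ vm_folder }}"
    name: "{{ neteye_vm_name }}"
    state: absent
    force: true   # allow removal even if the VM is still powered on
  delegate_to: localhost
```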

To do this, we implemented a “docker-like” suffix for the instance names to avoid any clashes, and wrote all the instance info into an inventory managed in a git repository (still in progress). We then wrote the roles we needed to perform those kinds of tasks.
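One simple way to get such a suffix is to combine random words, similar to how Docker names its containers. The sketch below is an assumption about how this could be done, not our actual role: the word lists, the `neteye-` prefix, `inventory_repo_path` and `neteye_ip` are all placeholders. Picking from short lists does not guarantee uniqueness by itself, so a real role would still need to check the name against existing instances.

```yaml
# Hypothetical sketch: word lists, paths and variable names are placeholders.
- name: Build a Docker-style instance name
  ansible.builtin.set_fact:
    neteye_vm_name: "neteye-{{ adjectives | random }}-{{ nouns | random }}"
  vars:
    adjectives: [brave, calm, eager, gentle]
    nouns: [falcon, otter, maple, comet]

- name: Record the instance in the inventory kept in the git repository
  ansible.builtin.lineinfile:
    path: "{{ inventory_repo_path }}/hosts"
    line: "{{ neteye_vm_name }} ansible_host={{ neteye_ip }}"
    create: true
  delegate_to: localhost
```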

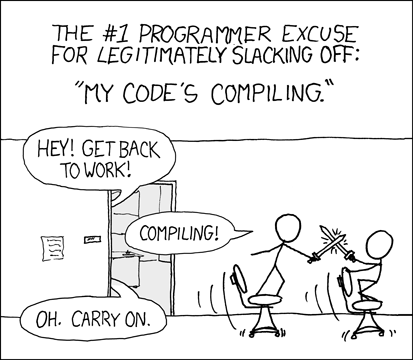

Now Würth Phoenix R&D engineers are able to spawn custom NetEye virtual machines with a single click, while watching their code compile or continuing to develop.

Thanks for reading. In the second part, we will describe how we automated the creation of a NetEye cluster, starting from the provisioner presented here.